During our ongoing integration work with Kubernetes and Azure Service Bus, we encountered a message processing conflict. During peak traffic, Kubernetes’ dynamic scaling led to multiple consumer instances processing messages concurrently. This caused a critical issue: newer data was sometimes overwritten by older, delayed updates, compromising our data integrity. The situation has given us valuable insight into why Azure Service Bus message sessions matter for maintaining order in distributed systems, and I’m documenting some key takeaways from this learning here.

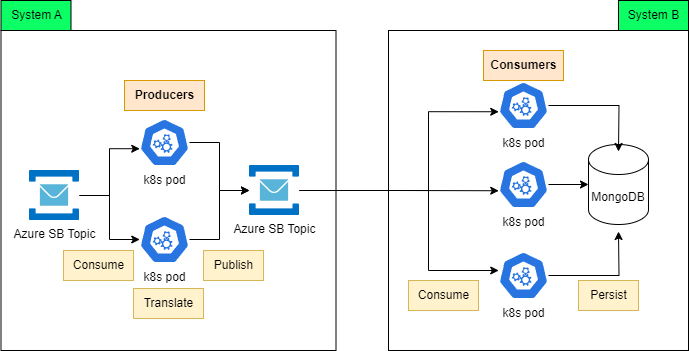

The following diagram illustrates the integration:

As the diagram shows, a number of components in System A modify an entity and publish entity change events to the first Azure Service Bus (SB) in System A. Producers then handle and translate these events and publish them to a second Azure SB.

For each event received, consumers in System B read, verify and persist the entity changes to MongoDB. Apart from these consumers, other components also read from and write to MongoDB when they receive events from other upstream systems.

Everything runs smoothly when the event volume is low, but during peak times the consumers start to hammer MongoDB with read/write operations. Although indexing and query optimization are in place, some operations occasionally take 5 minutes or more to complete. As a result, a newer update can be overwritten by an older one that finishes 5 minutes later.
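To make this failure mode concrete, below is a minimal Python simulation. It is a sketch, not our consumer code; the Update type, the entity ID and the timings are hypothetical. Writes are applied in the order they complete rather than the order the changes happened, which is effectively what concurrent consumers produce when every write is an unconditional overwrite.

```python
from dataclasses import dataclass


@dataclass
class Update:
    entity_id: str
    version: int        # hypothetical: order of the change in System A
    finishes_at: float  # simulated time (seconds) when the MongoDB write completes


def apply_last_writer_wins(updates):
    """Apply writes in completion order with an unconditional overwrite."""
    store = {}
    for update in sorted(updates, key=lambda u: u.finishes_at):
        store[update.entity_id] = update.version  # no check against what is stored
    return store


# Version 1 is stuck behind a slow MongoDB operation (~5 minutes), while
# version 2 is handled quickly by another consumer instance.
updates = [
    Update("order-42", version=1, finishes_at=300.0),
    Update("order-42", version=2, finishes_at=2.0),
]
final = apply_last_writer_wins(updates)
print(final)  # {'order-42': 1}: the stale update wins
```

Neither consumer does anything wrong in isolation. The problem is that nothing ties the order of the writes to the order of the events.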

In the spirit of collaborative engineering, we’re pulling a few potential solution options out of our toolbox.

What are some of the options?

- We initially explored MongoDB transactions. However, this proved unsuitable due to the complexity of multiple write operations per event, coupled with potential 5-minute delays during high load. Furthermore, our team lacked deep expertise in MongoDB transactions, making implementation risky within our time constraints.

- Since the current documents have a version property, our next option was to explore document versioning. We had to abandon this too, because the repository class is shared by a number of components and the time needed to regression test all of them could be substantial. In addition, we would need support from the team (SME) that looks after this system, and sadly, their time has been occupied with resolving production incidents.

- Third, we considered raising a support ticket with MongoDB to collaborate with their engineers, given that this issue was not unique to our consumer. If cost, time, and SME capacity were not constraints, this would be the preferred option.

- Last but not least, the option that we decided to explore: changing the Azure Service Bus topic subscription to be session-based.
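Sessions help because the broker hands a given session to only one listener at a time and delivers that session’s messages in order. Below is a minimal in-memory Python model of that guarantee. It assumes, for illustration only, that the sender uses the entity ID as the message’s SessionId. Session-to-listener assignment is round-robin purely for the sketch; in Service Bus the broker decides which listener accepts which session.

```python
from collections import OrderedDict, deque


def group_by_session(messages):
    """Group messages into per-session FIFO queues, keeping send order."""
    sessions = OrderedDict()
    for msg in messages:
        sessions.setdefault(msg["session_id"], deque()).append(msg)
    return sessions


def process_with_sessions(messages, listeners):
    """Give each session to exactly one listener and drain it in order.

    Round-robin assignment is an illustration only; the broker chooses
    which listener accepts which session.
    """
    store, log = {}, []
    for i, (session_id, queue) in enumerate(group_by_session(messages).items()):
        listener = listeners[i % len(listeners)]
        while queue:
            msg = queue.popleft()
            store[session_id] = msg["version"]  # later messages always land later
            log.append((listener, session_id, msg["version"]))
    return store, log


messages = [
    {"session_id": "order-42", "version": 1},
    {"session_id": "order-7", "version": 1},
    {"session_id": "order-42", "version": 2},
]
store, log = process_with_sessions(messages, ["pod-a", "pod-b"])
print(store)  # {'order-42': 2, 'order-7': 1}
print(log)
```

The trade-off is throughput: messages for one entity are processed strictly one after another, so a slow write delays later updates for that entity instead of being overtaken by them.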

Time for a bit of Googling and then, Copiloting

Exploring Azure Service Bus message sessions started with a simple Google search, which led me to this invaluable resource: https://learn.microsoft.com/en-us/azure/service-bus-messaging/message-sessions. From there, my learning journey began.

To help me understand the documentation, I created two console programs with the Azure.Messaging.ServiceBus package: one as the sender and another as the listener. For the listener, a ServiceBusSessionProcessor is created through the ServiceBusClient.CreateSessionProcessor method. Additionally, I configured custom logging at the Verbose level for Azure.Messaging.ServiceBus.

I would like to share key takeaways from the following three items:

- Session lock renewal

- Message TTL

- Storage size associated with session state

Session Lock Renewal

Once ServiceBusSessionProcessor.StartProcessingAsync is called, by default, the processor waits 1 minute for an incoming message. If no message arrives, the retry policy kicks in, and this can be configured using ServiceBusRetryOptions.

When messages are received, the processor locks the session, and during this period no other listener can process messages from that session. The default lock duration is 1 minute; you can specify a different value at the queue or subscription level.

If MaxAutoLockRenewalDuration is configured (default 5 minutes), the Azure SDK internally renews the session lock until processing is completed or MaxAutoLockRenewalDuration has been reached. How often the lock is renewed depends on the lock duration. If you are curious about how this is handled, check out the code for SessionReceiverManager and ReceiverManager (CalculateRenewDelay).
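To build intuition for how renewal frequency depends on the lock duration, here is a simplified Python model. The rule is my assumption, loosely inspired by CalculateRenewDelay rather than copied from it. Renewal happens once the remaining lock time minus a buffer has elapsed, where the buffer is half the remaining time capped at 10 seconds. Renewing stops once a MaxAutoLockRenewalDuration-style window has passed. Check the SDK source for the exact behavior.

```python
def renew_delay(remaining_lock_s, max_buffer_s=10.0):
    """Seconds to wait before renewing a lock (assumed rule, not the SDK's)."""
    if remaining_lock_s <= 0:
        return 0.0
    buffer = min(remaining_lock_s / 2, max_buffer_s)
    return remaining_lock_s - buffer


def renewal_times(lock_duration_s, max_auto_renew_s=300.0):
    """Offsets (seconds after the lock is taken) at which renewals would fire.

    Assumes each renewal succeeds instantly and resets the remaining lock time
    to the full lock duration.
    """
    if lock_duration_s <= 0:
        raise ValueError("lock duration must be positive")
    times, t = [], 0.0
    while True:
        t += renew_delay(lock_duration_s)
        if t >= max_auto_renew_s:
            break
        times.append(t)
    return times


print(renewal_times(30))  # every 20 s with a 30-second lock
print(renewal_times(60))  # [50.0, 100.0, 150.0, 200.0, 250.0]
```

Under this model, a shorter lock duration means more frequent renewals. Once processing outlasts the renewal window (5 minutes by default), nothing renews the lock any more.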

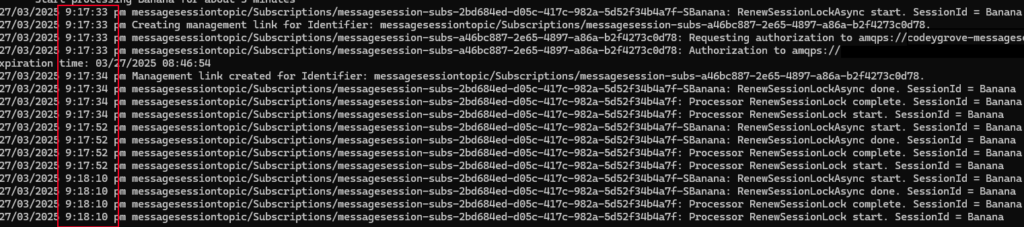

Below is the screenshot when lock duration is 30 seconds.

What I uncovered was the potential for concurrent processing. Session lock renewal runs as a background task, separate from the message handler, so the handler is not stopped when the lock is lost. If processing takes longer than the lock duration (without renewal) or longer than MaxAutoLockRenewalDuration, the session lock expires while the listener is still working. Another listener can then acquire the session and start processing the same message, even as the original listener continues its operation. This scenario introduces a substantial risk of data duplication and processing conflicts, and it demands careful mitigation.

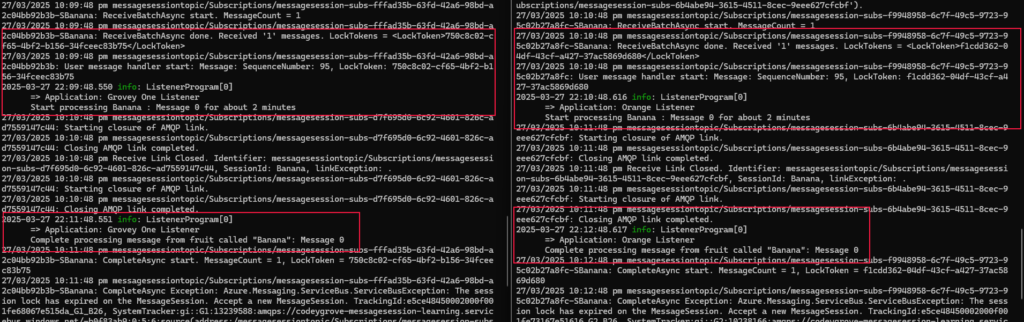

Below screenshot highlight the issue (For this test, there is no lock renewal and lock duration is 1 minute)

- Started two listeners, Grovey One and Orange

- Grovey One locks the session, starts processing the message at 22:09 and delays for 2 minutes

- Grovey One loses the session lock but is still processing the message

- Orange locks the session, starts processing at 22:10 and delays for 2 minutes

- Orange loses the session lock but is still processing the message

- And so on…
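The timeline above can be reproduced with a small deterministic Python simulation. This is a sketch, not the SDK. It models a session lock that is never renewed, a 60-second lock duration, a 120-second handler and two listeners. Whenever the lock expires, the next idle listener accepts the session and starts the same message again. The listener names come from the test. Re-acquiring the lock instantly on expiry and ignoring the failed completion at the end of each run are simplifying assumptions.

```python
def simulate_lock_loss(listeners, lock_duration_s, processing_s, horizon_s):
    """Simulate one session whose lock is never renewed.

    Each time the lock expires, the next idle listener (round-robin) accepts
    the session and starts processing the same message, even though the
    previous holder may still be busy. Returns (listener, start, end) tuples.
    """
    runs = []
    busy_until = {name: 0 for name in listeners}
    t, i = 0, 0
    while t < horizon_s:
        for _ in range(len(listeners)):
            name = listeners[i % len(listeners)]
            i += 1
            if busy_until[name] <= t:  # idle, so it can accept the session
                busy_until[name] = t + processing_s
                runs.append((name, t, t + processing_s))
                break
        t += lock_duration_s  # lock expires; the session is available again
    return runs


def overlapping_runs(runs):
    """Consecutive runs that were in flight at the same time."""
    return [(a[0], b[0], b[1]) for a, b in zip(runs, runs[1:]) if b[1] < a[2]]


runs = simulate_lock_loss(["Grovey One", "Orange"], 60, 120, 300)
for name, start, end in runs:
    print(f"{name}: t={start}s..{end}s")
print(overlapping_runs(runs))
```

With a 2-minute handler and a 1-minute lock, every run after the first overlaps with its predecessor. That overlap is the duplicate-processing window the screenshot shows.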

The documentation recommends setting the lock duration higher than the normal processing time, so the lock doesn’t have to be renewed. Regardless of whether you use auto-renewal or a longer lock duration, always keep the implications in mind and have a backup plan for handling lock loss in your specific scenario.
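One possible backup plan is sketched below in Python. A hypothetical in-memory store stands in for the MongoDB repository. Before persisting, the write is skipped if any of these holds:

- the session lock has already expired
- the message ID was applied before
- the stored document already has the same or a newer version

The lock check alone is not enough, because the lock can expire right after the check; the version comparison is the real safety net. Against MongoDB, that comparison would need to be atomic, for example an update filtered on `{"_id": entity_id, "version": {"$lt": version}}`. In the .NET SDK, the session’s lock expiry should be available from the event args (I believe as SessionLockedUntil; verify against your SDK version).

```python
import time


class GuardedStore:
    """Hypothetical in-memory stand-in for the MongoDB repository."""

    def __init__(self):
        self.docs = {}        # entity_id -> {"version": int, "data": dict}
        self.applied = set()  # message IDs already persisted (idempotency)

    def apply(self, message_id, entity_id, version, data, lock_expires_at, now=None):
        now = time.time() if now is None else now
        if now >= lock_expires_at:
            return "skipped: session lock already lost"
        if message_id in self.applied:
            return "skipped: duplicate message"
        current = self.docs.get(entity_id)
        if current is not None and current["version"] >= version:
            return "skipped: stale version"
        self.docs[entity_id] = {"version": version, "data": data}
        self.applied.add(message_id)
        return "applied"


store = GuardedStore()
print(store.apply("m2", "order-42", 2, {"status": "shipped"}, lock_expires_at=100, now=10))   # applied
print(store.apply("m2", "order-42", 2, {"status": "shipped"}, lock_expires_at=100, now=20))   # duplicate
print(store.apply("m1", "order-42", 1, {"status": "created"}, lock_expires_at=400, now=300))  # stale
print(store.apply("m3", "order-42", 3, {"status": "closed"}, lock_expires_at=60, now=61))     # lock lost
```

This revisits the document versioning idea, but only inside the session consumer, so it does not touch the shared repository class.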

Message Time To Live

For message TTL, my objective was to understand how message expiration works in a session-based subscription. As mentioned in this documentation, “If there’s a single message in the session that has passed the TTL, all the messages in the session are expired. The messages expire only if there’s an active listener”. I believe “active listener” is crucial in this context. Unfortunately, I couldn’t reproduce the behavior described on that page.

This is what I did:

- Increase the subscription’s “Maximum delivery count” and “Message TTL”

- Create a new listener to:
  - Receive an incoming message and Task.Delay for 3 seconds
  - Abandon the message

- The sender sends the following messages:
  - For the first 30 seconds, send out a message with a TTL of 1 minute every second
  - Task.Delay for 5 seconds
  - For the next 30 seconds, send out a message with a TTL of 5 minutes

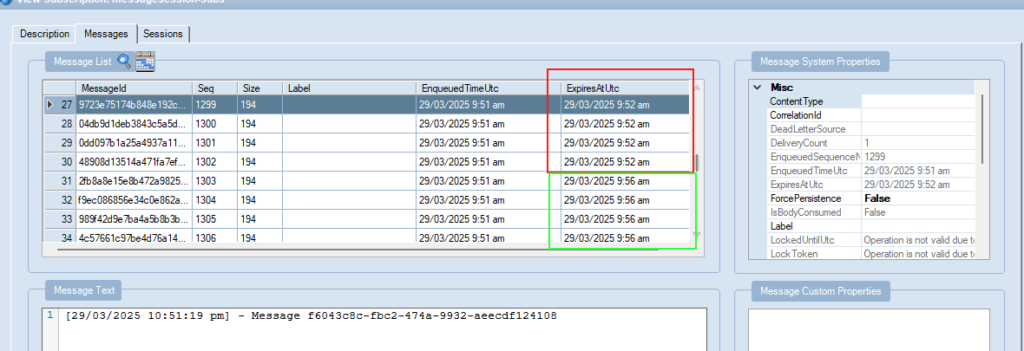

- This is what is shown in the Service Bus Explorer

Based on the documentation, I expected that at 09/03/2025 9:52 am or 9:53 am, all messages in that session would expire.

In the first round of testing, while the listener consumed and abandoned the first message, I kept refreshing the explorer. Once the first expiry time was reached, messages were moved to the dead-letter queue (DLQ) one at a time, each as soon as its own TTL had passed. Any message whose TTL had not yet been reached remained in the session, and the listener continued to consume it.

For subsequent tests, I stopped the listener after it had run for 30 seconds and monitored the number of messages in Service Bus. About 10 seconds after the expiry time had passed, I restarted the listener. Instead of messages being dead-lettered one at a time, the explorer showed that all messages past their TTL were dead-lettered at once.
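To pin down the difference, here is a small Python model contrasting the documented session-wide rule with the per-message behavior I observed. The send schedule approximates the test sender. Two details are my assumptions: the 5-minute-TTL batch was also sent once per second, and the 5-second pause started right after the first batch. Times are seconds after the first send.

```python
def build_schedule():
    """(send_time_s, ttl_s) pairs approximating the test sender.

    Assumptions: one message per second in both batches, and the 5-second
    pause starts right after the first batch.
    """
    first = [(t, 60) for t in range(0, 30)]     # TTL of 1 minute
    second = [(t, 300) for t in range(35, 65)]  # TTL of 5 minutes
    return first + second


def expired_per_message(schedule, now_s):
    """Observed: each message expires on its own TTL."""
    return [i for i, (sent, ttl) in enumerate(schedule) if sent + ttl <= now_s]


def expired_session_wide(schedule, now_s):
    """Documented: once any message in the session has passed its TTL, all
    messages in the session are expired (given an active listener, which is
    not modelled here)."""
    if any(sent + ttl <= now_s for sent, ttl in schedule):
        return list(range(len(schedule)))
    return []


schedule = build_schedule()
for now_s in (59, 60, 90):
    print(now_s, len(expired_per_message(schedule, now_s)), len(expired_session_wide(schedule, now_s)))
# 59 0 0
# 60 1 60
# 90 30 60
```

Neither function models the “active listener” condition, which is exactly the part I couldn’t pin down.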

My testing revealed a clear discrepancy between the documented behavior of message TTL in session-enabled subscriptions and the actual observed behavior. It appears there are undocumented mechanisms at play, or the definition of ‘active listener’ requires further clarification. Therefore, I strongly encourage readers to share any insights or experiences they’ve had with this particular aspect of Service Bus.

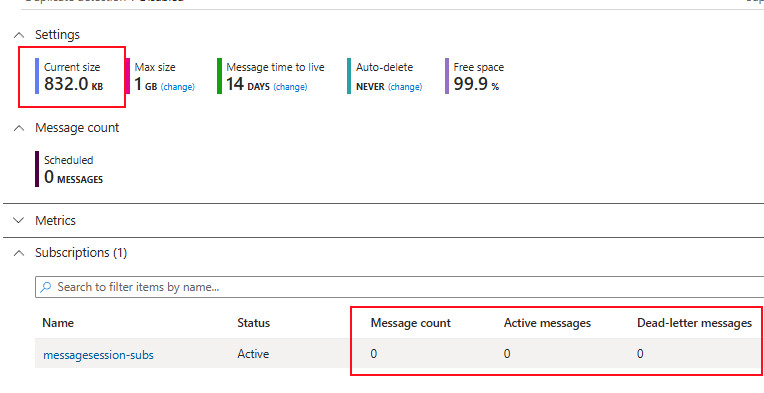

Storage size associated with session state

Last but not least, if your application uses session state to preserve and manage application-specific data related to a message session, it’s recommended that the application clean up its retained state to avoid external management cost.

“External management cost” in the context of Azure Service Bus session state refers to the cumulative financial and operational burden of neglecting to clean up session data. It’s not a direct, separate billing item; rather, it is the added expense and complexity that stem from increased storage usage, potential performance degradation, and the need for manual cleanup. Retained session state counts towards the entity’s storage quota, so letting it accumulate unnecessarily risks hitting that quota and adds operational overhead for troubleshooting and data management, all of which affect your application’s efficiency and budget.

For this experiment, I modified the sender to send a message every minute for 15 minutes. Each message had an incremental session ID, i.e. 0 – 14. On the listener, when processing an incoming message, I called SetSessionStateAsync to set a dummy state of 256 * 256 bytes of binary data and completed the message without clearing the session state.

As seen in the screenshot, there are no messages in the topic. Nevertheless, because the application did not clean up the state, it still counts towards that topic’s storage quota. Imagine the ramifications if multiple subscriptions, each with session-enabled consumers, neglect to clean up their session states. This could lead to a rapid accumulation of storage, potentially exceeding entity quotas and incurring unexpected Azure costs. Moreover, diagnosing and resolving such storage issues can add significant operational overhead.
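To put numbers on the experiment: each session retained 256 * 256 = 65,536 bytes, so 15 sessions hold 983,040 bytes (960 KiB) with no messages in the topic. The Python sketch below contrasts that with a cleanup pattern, using a hypothetical in-memory stand-in for the broker-side session state. How the listener knows a session is finished is application-specific. Here I assume a hypothetical is_last flag, for example an application property set by the sender. In the .NET SDK, clearing would presumably be a SetSessionStateAsync call with null; check the SDK documentation before relying on that.

```python
class SessionStateStore:
    """Hypothetical stand-in for session state retained by the broker."""

    def __init__(self):
        self._state = {}

    def set(self, session_id, state):
        if state is None:
            self._state.pop(session_id, None)  # clearing releases the space
        else:
            self._state[session_id] = state

    def retained_bytes(self):
        return sum(len(s) for s in self._state.values())


def handle(store, session_id, payload, is_last):
    """Checkpoint progress in session state; clear it when the session ends."""
    store.set(session_id, payload)
    if is_last:
        store.set(session_id, None)


dummy = bytes(256 * 256)  # same size as the dummy state in the experiment
with_cleanup, without_cleanup = SessionStateStore(), SessionStateStore()
for session_id in range(15):  # session IDs 0-14
    handle(with_cleanup, str(session_id), dummy, is_last=True)
    handle(without_cleanup, str(session_id), dummy, is_last=False)
print(with_cleanup.retained_bytes(), without_cleanup.retained_bytes())  # 0 983040
```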

In Conclusion

My journey into Azure Service Bus message sessions revealed critical insights into session lock management, message TTL behavior, and the importance of session state cleanup. By understanding these nuances, I can build more robust and reliable distributed systems. As I continue to navigate the complexities of cloud-based messaging, sharing our experiences and insights becomes invaluable for the wider developer community. Have you encountered similar challenges with Azure Service Bus sessions? Share your experiences in the comments below!
