Welcome back to my series on MongoDB “gotchas” for .NET developers! In my previous post, I shared a surprising lesson about how MongoDB’s schema flexibility and the .NET driver’s default deserialization can lead to unexpected errors if you’re not careful with evolving your data models and using [BsonIgnoreExtraElements].

Today, I want to dive into an even more subtle and insidious issue that cropped up with array updates and dot notation. This one left us scratching our heads for a while and revealed a hidden behavior of MongoDB’s type inference that can silently corrupt your data structure. The chilling part? We only caught this one during UAT, highlighting the importance of comprehensive testing even with NoSQL databases.

The Power of Dot Notation: A Double-Edged Sword (Again)

One of MongoDB’s great strengths lies in its ability to directly access and modify nested fields within a document using dot notation. This capability is incredibly powerful for efficient updates, allowing you to target specific parts of a document without needing to fetch the entire document, modify it locally, and then send it back. For example, updating a simple field like DeliveryDetail.PostCode is straightforward and performant using the .NET driver’s strongly-typed syntax, which internally translates to MongoDB’s dot notation:

    // Example of a simple dot notation update
    var filter = Builders<Order>.Filter.Eq(o => o.Id, "someOrderId");
    var update = Builders<Order>.Update.Set(o => o.DeliveryDetail.PostCode, "UpdatedPostCode");
    await _ordersCollection.UpdateOneAsync(filter, update);

This C# code translates to a MongoDB update operation that uses dot notation like this:

    // Equivalent MongoDB shell update
    db.orders.updateOne(
        { _id: "someOrderId" },
        { $set: { "DeliveryDetail.PostCode": "UpdatedPostCode" } }
    );

This directness extends to arrays as well, allowing you to update specific elements or sub-documents within arrays. This is precisely where the flexibility can turn into a trap if not wielded with precision. To learn more about dot notation, refer to https://www.mongodb.com/docs/manual/core/document/#dot-notation.

The Gotcha: When a Seemingly Logical Refactor Corrupts Your Arrays

Let’s illustrate this gotcha with a simplified example that mirrors a real-world scenario. We’re working with our Order document, which contains nested DeliveryDetail and OrderDetail subdocuments. The OrderDetail in turn holds an array of OrderItem objects:

    // Relevant POCOs
    using System;
    using MongoDB.Bson;
    using MongoDB.Bson.Serialization.Attributes;

    namespace MongoDbLearning.Common
    {
        // POCO classes for your document structure
        [BsonIgnoreExtraElements] // Learned this lesson from my last post!
        public class Order
        {
            [BsonId]
            public string Id { get; set; }
            public DateTime OrderDate { get; set; }
            public DeliveryDetail DeliveryDetail { get; set; }
            public OrderDetail OrderDetail { get; set; } // Contains OrderItems array
        }

        public class OrderDetail
        {
            public OrderItem[] OrderItems { get; set; } // Array of OrderItem
            public int TotalItem { get; set; }
            public decimal TotalPrice { get; set; }
        }

        public class OrderItem
        {
            public string CatalogItemId { get; set; }
            public int OrderedQuantity { get; set; }
            public int SuppliedQuantity { get; set; }
        }

        public class DeliveryDetail
        {
            public string DeliveryAddress { get; set; }
            public DateTime DeliveryDate { get; set; }
            public string PostCode { get; set; }
            public bool Delivered { get; set; }
            public DateTime LastUpdated { get; set; }
        }
    }

Initially, our Project A (Updater) handled saving and updating Order documents using a straightforward approach: replacing entire subdocuments when changes occurred. This looked something like SaveOrderDetailV1Async:

    // In Project A (Updater) - Initial Approach
    public async Task<int> SaveOrderDetailV1Async(Order orderPoco)
    {
        if (orderPoco == null) return 0;

        var updateFilter = Builders<Order>.Filter.Eq("_id", orderPoco.Id);
        var updateDefinition = Builders<Order>.Update
            .Set(order => order.OrderDate, orderPoco.OrderDate);

        if (orderPoco.DeliveryDetail != null)
        {
            updateDefinition = updateDefinition
                .Set(order => order.DeliveryDetail, orderPoco.DeliveryDetail);
        }

        if (orderPoco.OrderDetail != null)
        {
            // Replaces the entire OrderDetail subdocument
            updateDefinition = updateDefinition
                .Set(order => order.OrderDetail, orderPoco.OrderDetail);
        }

        var result = await _ordersCollection.UpdateOneAsync(updateFilter, updateDefinition, new UpdateOptions() { IsUpsert = true });
        return (int)result.ModifiedCount;
    }

SaveOrderDetailV1Async works well, but as in any application, requirements change. In our case, we needed to run specific business logic whenever any array property was updated, and we leveraged MongoDB’s Change Streams capability to do so. In our example, this means that whenever OrderedQuantity or SuppliedQuantity is updated, we want to be able to capture the change. For simplicity, we will assume that items cannot be added to or removed from the OrderItems array.
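To make that requirement concrete, here is a minimal sketch of the kind of listener I have in mind. Treat it as an illustration under assumptions rather than our production code: OrderItemChangeListener and IsQuantityPath are hypothetical names, the collection is read as BsonDocument for simplicity, and change streams need a replica set or sharded cluster to work at all.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using MongoDB.Bson;
using MongoDB.Driver;

// Hypothetical helper for illustration; not part of the original projects.
public static class OrderItemChangeListener
{
    // True when a path such as "OrderDetail.OrderItems.0.OrderedQuantity"
    // points at one of the two quantity fields inside the OrderItems array.
    public static bool IsQuantityPath(string path) =>
        path.StartsWith("OrderDetail.OrderItems.", StringComparison.Ordinal) &&
        (path.EndsWith(".OrderedQuantity", StringComparison.Ordinal) ||
         path.EndsWith(".SuppliedQuantity", StringComparison.Ordinal));

    // Watches the collection and prints quantity changes until cancelled.
    public static async Task WatchAsync(IMongoCollection<BsonDocument> orders, CancellationToken token)
    {
        using var cursor = await orders.WatchAsync(cancellationToken: token);
        while (await cursor.MoveNextAsync(token))
        {
            foreach (var change in cursor.Current)
            {
                if (change.OperationType != ChangeStreamOperationType.Update) continue;

                // UpdatedFields holds the paths the server reports as changed.
                var quantityFields = change.UpdateDescription.UpdatedFields
                    .Where(field => IsQuantityPath(field.Name));

                foreach (var field in quantityFields)
                {
                    Console.WriteLine($"{change.DocumentKey["_id"]}: {field.Name} = {field.Value}");
                }
            }
        }
    }
}
```

The part to notice is UpdatedFields: a listener like this can only react to individual quantities if the update reaches the server as individual dotted paths.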

Since SaveOrderDetailV1Async replaces the entire OrderDetail subdocument, this operation would trigger a MongoDB change stream event for the whole OrderDetail even if only minor, non-top-level changes occurred within OrderItems or if no items were actually changed. To ensure change streams only trigger for actual updates to OrderedQuantity or SuppliedQuantity, we refactored SaveOrderDetailV1Async into SaveOrderDetailV2Async.

    // In Project A (Updater) - Refactored Approach (V2)
    public async Task<int> SaveOrderDetailV2Async(Order orderPoco)
    {
        if (orderPoco == null)
        {
            return 0;
        }

        var updateFilter = Builders<Order>.Filter.Eq("_id", orderPoco.Id);
        var updateDefinition = Builders<Order>.Update
            .Set(order => order.OrderDate, orderPoco.OrderDate);

        if (orderPoco.DeliveryDetail != null)
        {
            updateDefinition = updateDefinition
                .Set(order => order.DeliveryDetail, orderPoco.DeliveryDetail);
        }

        // Now uses a helper method for OrderDetail updates
        updateDefinition = CreateOrderDetailUpdateDefinition(orderPoco, updateDefinition);

        var result = await _ordersCollection.UpdateOneAsync(updateFilter, updateDefinition, new UpdateOptions() { IsUpsert = true });
        return (int)result.ModifiedCount;
    }

    private static UpdateDefinition<Order> CreateOrderDetailUpdateDefinition(Order orderPoco, UpdateDefinition<Order> updateDefinition)
    {
        if (orderPoco.OrderDetail != null)
        {
            const string orderDetailsPath = nameof(Order.OrderDetail);

            updateDefinition = updateDefinition
                .Set($"{orderDetailsPath}.{nameof(OrderDetail.TotalItem)}", orderPoco.OrderDetail.TotalItem)
                .Set($"{orderDetailsPath}.{nameof(OrderDetail.TotalPrice)}", orderPoco.OrderDetail.TotalPrice);

            // **THE PROBLEMATIC PART**
            // This loop iterates through the OrderItems array and updates each item individually by index.
            // If the OrderItems field doesn't exist yet in the stored document, MongoDB treats
            // "0", "1", ... as field names and creates an object instead of an array.
            // Unfortunately, in my case, I need these per-index paths for the change stream.
            for (var i = 0; i < orderPoco.OrderDetail.OrderItems.Length; i++)
            {
                var orderItemPoco = orderPoco.OrderDetail.OrderItems[i];
                var orderItemPath = $"{orderDetailsPath}.{nameof(OrderDetail.OrderItems)}.{i}";

                updateDefinition = updateDefinition
                    .Set($"{orderItemPath}.{nameof(OrderItem.CatalogItemId)}", orderItemPoco.CatalogItemId)
                    .Set($"{orderItemPath}.{nameof(OrderItem.OrderedQuantity)}", orderItemPoco.OrderedQuantity)
                    .Set($"{orderItemPath}.{nameof(OrderItem.SuppliedQuantity)}", orderItemPoco.SuppliedQuantity);
            }
        }

        return updateDefinition;
    }

The team in Project A (Updater) deployed this V2 method. New updates appeared to go through correctly. However, during UAT, Project B (Reader) suddenly started encountering a FormatException for some Order documents.

    System.FormatException: 'An error occurred while deserializing the OrderDetail property of class MongoDbLearning.Common.Order: An error occurred while deserializing the OrderItems property of class MongoDbLearning.Common.OrderDetail: Cannot deserialize a 'OrderItem[]' from BsonType 'Document'.'

This error message was highly revealing: Project B was expecting an OrderItem[] (an array) but received a Document (an object) instead.

The Unexpected Outcome: When Your Array Becomes an Object!

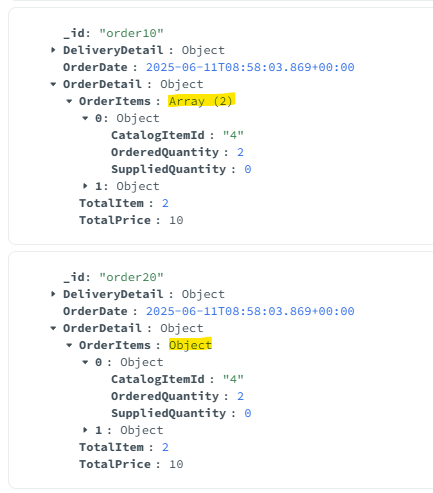

Upon investigation, we found the horrifying truth: for certain Order documents, the OrderItems field was no longer an array! It had been silently transformed into an object. This happened specifically when an Order document initially did not have the OrderDetail subdocument at all, or if its OrderItems array was null or absent.

When SaveOrderDetailV2Async called CreateOrderDetailUpdateDefinition, and that function’s loop tried to $set properties at paths like "OrderDetail.OrderItems.0.CatalogItemId" on a document that didn’t have OrderDetail or OrderItems as an array already, MongoDB’s default behavior kicked in:

- It implicitly created OrderDetail as an object.
- Then, within OrderDetail, it implicitly created OrderItems also as an object, because "0" (the index) was interpreted as a field name within that newly created object, not an array index.
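The rule is easier to see in a toy model. The sketch below is plain C# with no driver involved: DotPathSimulator is a hypothetical helper that mimics the behaviour described above, using Dictionary for embedded documents and List for arrays. It is a simplification for illustration, not how the server is actually implemented.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Toy model of resolving a dotted $set path; an assumption-laden sketch only.
public static class DotPathSimulator
{
    public static void Set(Dictionary<string, object?> document, string path, object? value)
    {
        string[] parts = path.Split('.');
        object? current = document;

        for (int i = 0; i < parts.Length; i++)
        {
            string part = parts[i];
            bool isLast = i == parts.Length - 1;

            if (current is List<object?> array)
            {
                // An array already exists here, so a numeric segment is an index.
                int index = int.Parse(part);
                while (array.Count <= index) array.Add(null);
                if (isLast) { array[index] = value; return; }
                array[index] ??= new Dictionary<string, object?>();
                current = array[index];
            }
            else if (current is Dictionary<string, object?> obj)
            {
                if (isLast) { obj[part] = value; return; }

                // A missing field is always created as an embedded document,
                // even when the next segment looks like an array index.
                if (!obj.TryGetValue(part, out object? next))
                {
                    next = new Dictionary<string, object?>();
                    obj[part] = next;
                }
                current = next;
            }
            else
            {
                throw new InvalidOperationException($"Cannot create field '{part}' inside a non-container value.");
            }
        }
    }
}
```

Running the same path against two starting documents shows the split:

```csharp
var withoutDetail = new Dictionary<string, object?> { ["_id"] = "order20" };
DotPathSimulator.Set(withoutDetail, "OrderDetail.OrderItems.0.CatalogItemId", "4");
// OrderItems is now a Dictionary with the key "0".

var withArray = new Dictionary<string, object?>
{
    ["_id"] = "order10",
    ["OrderDetail"] = new Dictionary<string, object?> { ["OrderItems"] = new List<object?>() }
};
DotPathSimulator.Set(withArray, "OrderDetail.OrderItems.0.CatalogItemId", "4");
// OrderItems is still a List, now holding one element.
```

The only thing that differs between the two runs is whether an array was already sitting at OrderItems when the path was resolved.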

Here’s what an affected document looked like in MongoDB:

If the document already has OrderDetail:

    {
      "_id": "order10",
      "DeliveryDetail": { /* ... */ },
      "OrderDate": {
        "$date": "2025-06-11T08:58:03.869Z"
      },
      "OrderDetail": {
        "OrderItems": [
          {
            "CatalogItemId": "4",
            "OrderedQuantity": 2,
            "SuppliedQuantity": 0
          },
          {
            "CatalogItemId": "2",
            "OrderedQuantity": 2,
            "SuppliedQuantity": 0
          }
        ],
        "TotalItem": 2,
        "TotalPrice": {
          "$numberDecimal": "10"
        }
      }
    }

Compare that to a document that did not have OrderDetail before the update:

    {
      "_id": "order20",
      "DeliveryDetail": { /* ... */ },
      "OrderDate": {
        "$date": "2025-06-11T08:58:03.869Z"
      },
      "OrderDetail": {
        "OrderItems": { // <-- UH OH! This is now an object, not an array!
          "0": {
            "CatalogItemId": "4",
            "OrderedQuantity": 2,
            "SuppliedQuantity": 0
          },
          "1": {
            "CatalogItemId": "2",
            "OrderedQuantity": 2,
            "SuppliedQuantity": 0
          }
        },
        "TotalItem": 2,
        "TotalPrice": {
          "$numberDecimal": "10"
        }
      }
    }

And if you are like me, using MongoDB Compass to explore your collections, this issue is easily overlooked. As the screenshot shows, both documents look the same, but the type is different (and yes, it took me a while to notice that).

This nuance can be easily overlooked in the official documentation for dot notation https://www.mongodb.com/docs/manual/core/document/#dot-notation, or at least it wasn’t immediately apparent to us.
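Since the difference is hard to spot by eye, a query can do the looking instead. Below is a hedged sketch of how affected documents could be listed. OrderItemsTypeAudit and its methods are names I made up for this example, and it reads the collection as BsonDocument because the typed Order collection would throw the FormatException shown earlier on the very documents we are looking for.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using MongoDB.Bson;
using MongoDB.Driver;

// Hypothetical audit helper; a sketch, not a tested production tool.
public static class OrderItemsTypeAudit
{
    public static FilterDefinition<BsonDocument> BuildCorruptedFilter()
    {
        const string path = "OrderDetail.OrderItems";
        var filter = Builders<BsonDocument>.Filter;

        // $type also inspects array elements, so a healthy array of sub-documents
        // matches the "object" type check as well. Excluding fields that are arrays
        // leaves only documents where OrderItems itself is an embedded document.
        return filter.And(
            filter.Type(path, BsonType.Document),
            filter.Not(filter.Type(path, BsonType.Array)));
    }

    // Returns the _id of every order whose OrderItems field is an object.
    public static async Task<List<BsonValue>> FindCorruptedOrderIdsAsync(IMongoCollection<BsonDocument> orders)
    {
        var documents = await orders
            .Find(BuildCorruptedFilter())
            .Project(Builders<BsonDocument>.Projection.Include("_id"))
            .ToListAsync();

        return documents.ConvertAll(document => document["_id"]);
    }
}
```

In our setup the untyped collection would come from database.GetCollection<BsonDocument>("orders"). A check like this could run after a deployment or as part of UAT, so the type drift shows up as a list of ids rather than as a deserialization failure in another project.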

The Solution: Proactive Document Structuring and Explicit Array Operators

The core of this issue lies in MongoDB’s implicit type inference when a dot notation path attempts to set a value in a part of the document that doesn’t yet exist, particularly when that path implies an array. Our problem was exacerbated because the OrderDetail subdocument itself might have been missing in the existing document.

Given our specific requirement to leverage MongoDB Change Streams for granular updates (i.e., capturing changes to OrderedQuantity or SuppliedQuantity within specific OrderItem array elements, which requires the update operation itself to target those specific dotted paths), we faced a unique challenge. Directly using dot notation with numeric indices (OrderDetail.OrderItems.0.CatalogItemId) on a document where the OrderItems array didn’t pre-exist was the cause of the array-to-object corruption.

Step 1: My Specific Fix for Granular Change Stream Events

In our scenario, to enable our precise change stream logic, we needed to ensure the OrderItems field was always an array before attempting granular dot notation updates to its elements. The immediate fix was a conditional check: if OrderDetail (and by extension, its OrderItems array) was not present in the existing document, we would first explicitly set the entire OrderDetail POCO. This pre-initialization guarantees that OrderDetail is created as an object and OrderItems as an array (even if empty) before any fine-grained updates attempt to target specific array indices. This approach leverages our assumption that OrderItems will always exist within OrderDetail if OrderDetail is present.

    if (orderPoco.OrderDetail != null)
    {
        var existingOrder = await GetOrderByIdAsync(orderPoco.Id);
        if (existingOrder?.OrderDetail != null)
        {
            // If OrderDetail (and thus OrderItems as an array) already exists,
            // we can proceed with granular dot notation updates as needed for change streams.
            // This 'CreateOrderDetailUpdateDefinition' method *now safely* uses paths like
            // "OrderDetail.OrderItems.0.CatalogItemId" because the array type is guaranteed.
            updateDefinition = CreateOrderDetailUpdateDefinition(orderPoco, updateDefinition);
        }
        else
        {
            // If OrderDetail or OrderItems is missing, explicitly set the entire OrderDetail POCO.
            // This ensures OrderDetail is an object and OrderItems is an array (even if empty).
            updateDefinition = updateDefinition.Set(order => order.OrderDetail, orderPoco.OrderDetail);
        }
    }

Step 2: General Best Practices for Array Manipulation (When No Specific Constraints Apply)

While my specific change stream requirement necessitates using dot notation with numeric indices (after ensuring array existence), it’s crucial for most scenarios to always use MongoDB’s explicit array update operators for granular array manipulation. These operators are designed to guarantee that the field is treated as an array and will either append, remove, or modify elements correctly, never implicitly changing the array’s type to an object. If you don’t have a requirement forcing dot notation like mine, these are the safer and preferred methods. For more information, refer to https://www.mongodb.com/docs/manual/reference/operator/update-array/.

Here’s how you should handle array updates when performing granular changes:

- To Update an Existing Item by Condition (Safest & Most Common)

Use the $ (positional operator) or $[<identifier>] (filtered positional operator) with $set. This ensures that OrderItems remains an array and only the matched element is modified. This requires a unique field within the array element to filter by (e.g., CatalogItemId).

    public async Task<int> SaveOrderDetailV2Async(Order orderPoco)
    {
        /* Existing Code */

        var arrayFilters = new List<ArrayFilterDefinition>();
        if (orderPoco.OrderDetail != null)
        {
            var existingOrder = await GetOrderByIdAsync(orderPoco.Id);
            if (existingOrder?.OrderDetail != null)
            {
                (updateDefinition, arrayFilters) = CreateOrderDetailUpdateDefinition(orderPoco, updateDefinition);
            }
            else
            {
                updateDefinition = updateDefinition
                    .Set(order => order.OrderDetail, orderPoco.OrderDetail);
            }
        }

        var result = (arrayFilters.Count > 0) ?
            await _ordersCollection.UpdateOneAsync(updateFilter, updateDefinition, new UpdateOptions() { IsUpsert = true, ArrayFilters = arrayFilters }) :
            await _ordersCollection.UpdateOneAsync(updateFilter, updateDefinition, new UpdateOptions() { IsUpsert = true });
        return (int)result.ModifiedCount;
    }

    private static (UpdateDefinition<Order>, List<ArrayFilterDefinition>) CreateOrderDetailUpdateDefinition(Order orderPoco, UpdateDefinition<Order> updateDefinition)
    {
        /* Existing Code */

        var arrayFilters = new List<ArrayFilterDefinition>();
        foreach (var orderItemPoco in orderPoco.OrderDetail.OrderItems)
        {
            // Define the identifier for the filtered positional operator.
            // It must be unique per item. MongoDB also requires it to begin with a lowercase
            // letter and contain only alphanumeric characters, so this assumes CatalogItemId
            // is alphanumeric.
            string identifier = $"item{orderItemPoco.CatalogItemId}";

            // Build the update definition for the specific item.
            // The update path now uses the filtered positional operator.
            updateDefinition = updateDefinition
                .Set($"{orderDetailsPath}.{nameof(OrderDetail.OrderItems)}.$[{identifier}].{nameof(OrderItem.CatalogItemId)}", orderItemPoco.CatalogItemId)
                .Set($"{orderDetailsPath}.{nameof(OrderDetail.OrderItems)}.$[{identifier}].{nameof(OrderItem.OrderedQuantity)}", orderItemPoco.OrderedQuantity)
                .Set($"{orderDetailsPath}.{nameof(OrderDetail.OrderItems)}.$[{identifier}].{nameof(OrderItem.SuppliedQuantity)}", orderItemPoco.SuppliedQuantity);

            // Add the array filter for the current item.
            // This tells MongoDB WHICH element in the OrderItems array should be updated,
            // using the same identifier as in the update paths above.
            // The condition checks that the 'CatalogItemId' of the array element matches the one we want to update.
            arrayFilters.Add(
                new BsonDocumentArrayFilterDefinition<Order>(
                    new BsonDocument { { $"{identifier}.{nameof(OrderItem.CatalogItemId)}", orderItemPoco.CatalogItemId } }
                )
            );
        }

        return (updateDefinition, arrayFilters);
    }

- To Add a New Item

Use the $push operator. This will create the array if it doesn’t exist, or append to it if it does.

    updateDefinition = updateDefinition
        .Set(order => order.OrderDetail.TotalItem, orderPoco.OrderDetail.TotalItem)
        .Set(order => order.OrderDetail.TotalPrice, orderPoco.OrderDetail.TotalPrice)
        .Push(order => order.OrderDetail.OrderItems, orderPoco.OrderDetail.OrderItems[2]);

- To Remove an Item

Use the $pull operator.

    updateDefinition = updateDefinition
        .Set(order => order.OrderDetail.TotalItem, orderPoco.OrderDetail.TotalItem)
        .Set(order => order.OrderDetail.TotalPrice, orderPoco.OrderDetail.TotalPrice)
        .PullFilter(order => order.OrderDetail.OrderItems, item => item.CatalogItemId == "9");

Step 3: Prevention with Schema Validation

For critical array fields, you can also use MongoDB Schema Validation (from version 3.6+) to enforce that OrderItems must be an array. This provides a database-level safety net, preventing these implicit type conversions from ever being saved. While it adds a layer of configuration, it serves as a strong safeguard against data corruption, especially if your application code might inadvertently cause such issues.

    var database = client.GetDatabase("MongoDbLearning");
    _ordersCollection = database.GetCollection<Order>("orders");

    var command = new BsonDocument
    {
        { "collMod", "orders" },
        { "validator", new BsonDocument
            {
                { "$jsonSchema", new BsonDocument
                    {
                        { "bsonType", "object" },
                        // { "required", new BsonArray { "OrderDetail" } }, // To make OrderDetail a required property
                        { "properties", new BsonDocument
                            {
                                { "OrderDetail", new BsonDocument
                                    {
                                        { "bsonType", "object" },
                                        { "required", new BsonArray { "OrderItems" } },
                                        { "properties", new BsonDocument
                                            {
                                                { "OrderItems", new BsonDocument
                                                    {
                                                        { "bsonType", "array" },
                                                        { "description", "must be an array and contain OrderItem objects" },
                                                        { "items", new BsonDocument
                                                            {
                                                                { "bsonType", "object" },
                                                                { "required", new BsonArray { "CatalogItemId", "OrderedQuantity" } },
                                                                { "properties", new BsonDocument
                                                                    {
                                                                        { "CatalogItemId", new BsonDocument { { "bsonType", "string" } } },
                                                                        { "OrderedQuantity", new BsonDocument { { "bsonType", "int" } } }
                                                                    }
                                                                }
                                                            }
                                                        }
                                                    }
                                                }
                                            }
                                        }
                                    }
                                }
                            }
                        }
                    }
                }
            }
        },
        { "validationLevel", "strict" }
    };

    database.RunCommand<BsonDocument>(command);

If I run through the “gotcha” scenario again, the .NET driver throws the following error:

    MongoDB.Driver.MongoWriteException: 'A write operation resulted in an error. WriteError: { Category : "Uncategorized", Code : 121, Message : "Document failed validation", Details : "{ "failingDocumentId" : "order52", "details" : { "operatorName" : "$jsonSchema", "schemaRulesNotSatisfied" : [{ "operatorName" : "properties", "propertiesNotSatisfied" : [{ "propertyName" : "OrderDetail", "details" : [{ "operatorName" : "properties", "propertiesNotSatisfied" : [{ "propertyName" : "OrderItems", "description" : "must be an array and contain OrderItem objects", "details" : [{ "operatorName" : "bsonType", "specifiedAs" : { "bsonType" : "array" }, "reason" : "type did not match", "consideredValue" : { "0" : { "CatalogItemId" : "4", "OrderedQuantity" : 25, "SuppliedQuantity" : 0 }, "1" : { "CatalogItemId" : "2", "OrderedQuantity" : 20, "SuppliedQuantity" : 0 } }, "consideredType" : "object" }] }] }] }] }] } }" }.'

If you are interested in learning more about MongoDB Schema Validation, refer to https://www.mongodb.com/docs/manual/core/schema-validation/.

Wrapping Up

This second “gotcha” was a powerful reminder that MongoDB’s schema flexibility, while incredibly enabling, requires a deeper understanding of its implicit type inference and the specific tools (like array update operators) designed to safely manipulate complex data structures. The lesson learned here wasn’t just about dot notation, but about the critical importance of using the right tool for the job – proactive document structuring and explicit array operators for array manipulation – to ensure your data maintains its intended structure.

Understanding how the driver maps BSON documents to C# objects, and how to explicitly handle scenarios like unrecognized elements or ambiguous paths, is crucial. While the [BsonIgnoreExtraElements] solution from my last post is simple for deserialization tolerance, here, the lesson revolves around safe serialization and modification strategies to preserve intended types, even when specific architectural choices, like granular change stream events, limit the direct use of certain array operators.

This experience also underscored the invaluable role of thorough UAT. It’s a reminder that subtle behaviors, especially those not immediately apparent in development, can have significant impacts.

I hope these two “gotcha” stories help you navigate your own MongoDB journey. Regarding the specific behavior of dot notation – where attempting to $set a nested field within a non-existent array path implicitly creates an object (with numeric keys) instead of an array – was this a surprise to you too? Or have I missed a crucial piece of documentation that explains this nuance more explicitly? I’m genuinely keen to hear your experiences, alternative perspectives, or if there’s a different way to understand this, so please share your thoughts in the comments below!

In my next post, I’ll delve into how this newfound understanding of precise, granular updates becomes especially important when working with MongoDB Change Streams and ensuring your real-time data flows accurately. Stay tuned!
