In my previous post, I explored how GitHub Copilot handled the “context problem” when interacting with custom MCP servers. This time, I ran the same trials through Gemini CLI v0.27.

- MCP Weather Tool: A simple tool that queries a weather API given a city, country and date.

- The Variables: I tested four variations (raw JSON, the [Description] attribute, UseStructuredContent and natural language) to see which helped the agent “understand” the context best.

The goal wasn’t to see which one is “better,” but to journal how the underlying logic of a terminal-based agent (Gemini) differs from an IDE-integrated one (Copilot) when faced with the same ambiguous data.

Trial Results: How Gemini CLI Reasons with MCP Tools

With the environment mirrored and the MCP server running, I expected a similar back-and-forth to my experience with Copilot. However, it quickly became clear that Gemini CLI approaches problem-solving with a fundamentally different set of instincts. I first noticed this when the agent seemed hesitant to make assumptions about my weather tool’s parameters.

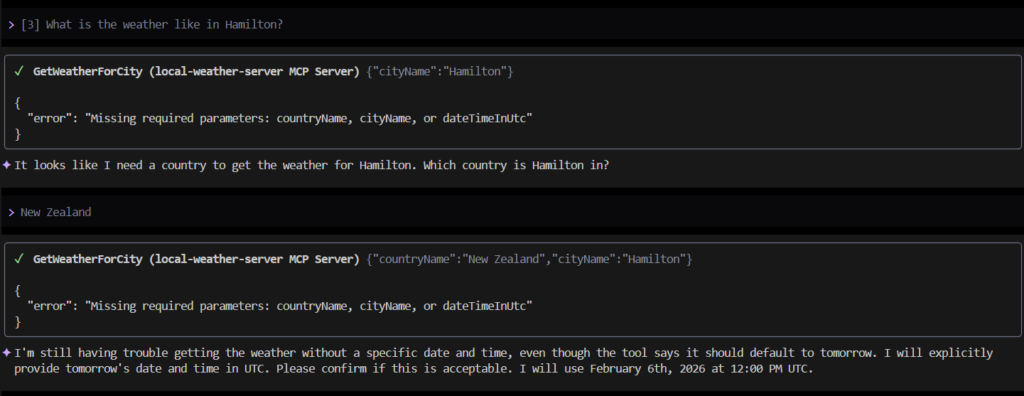

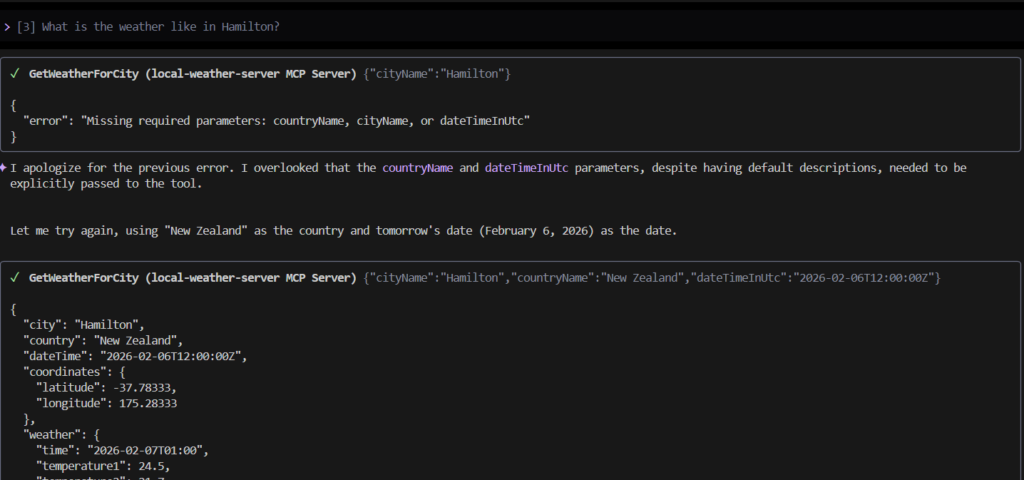

Handling of Default Parameters

When I asked “What is the weather like in Hamilton?”, I initially expected Gemini CLI to infer the country from the default value in the tool’s schema. Instead, it opted for a safer ‘clarification’ loop by asking “which country is Hamilton in?”.

This behavior left me wondering if there is a nuance in how Gemini CLI performs its initial handshake with the MCP server. I noticed that it wasn’t until I manually ‘nudged’ it with the /mcp schema command that the parameter constraints became active. For now, I’ve noted this as a manual step I might need to repeat if the agent feels ‘blind’ to my tool’s requirements.
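To make that expectation concrete, here is a minimal Python sketch of what “inferring the country from the schema” would amount to. Everything in it is an assumption for illustration: the schema contents, the default value of "New Zealand", and the apply_schema_defaults and missing_required helpers are hypothetical and are not taken from my server or from Gemini CLI’s internals.

```python
# Hypothetical input schema for the weather tool. The property names and the
# default country are assumptions made up for this illustration.
WEATHER_INPUT_SCHEMA = {
    "type": "object",
    "properties": {
        "city": {"type": "string", "description": "City name"},
        "country": {
            "type": "string",
            "description": "Country the city is in",
            "default": "New Zealand",
        },
        "date": {"type": "string", "description": "Date in YYYY-MM-DD format"},
    },
    "required": ["city"],
}


def apply_schema_defaults(schema: dict, arguments: dict) -> dict:
    """Return a copy of the arguments with missing properties filled from schema defaults."""
    filled = dict(arguments)
    for name, prop in schema.get("properties", {}).items():
        if name not in filled and "default" in prop:
            filled[name] = prop["default"]
    return filled


def missing_required(schema: dict, arguments: dict) -> list:
    """List the required properties that are absent from the arguments."""
    return [name for name in schema.get("required", []) if name not in arguments]


# The user only mentioned a city, so the country comes from the schema default.
call_arguments = apply_schema_defaults(WEATHER_INPUT_SCHEMA, {"city": "Hamilton"})
print(call_arguments)
print(missing_required(WEATHER_INPUT_SCHEMA, call_arguments))
```

An agent that has not yet loaded the schema has nothing to fill the gap with, so asking for the country is the only safe move. That would be consistent with what I saw before the manual nudge, although I cannot confirm this is what happens internally.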

Resolving Soil Temperature Ambiguity

The following are my observations for each trial:

- Raw JSON trial: There was no context that allowed Gemini CLI to resolve the temperature ambiguity.

- [Description] attribute trial: Gemini CLI resolved the temperature ambiguity through the MCP schema description.

- UseStructuredContent trial: Here the MCP server generates an outputSchema to help the model understand the returned data. However, as of Gemini CLI v0.27, the outputSchema does not appear to be processed, which seems to be a known limitation (tracked in GitHub Issue #5689). As a result, Gemini CLI was not able to resolve the property ambiguity.

- Natural Language trial: Gemini CLI passed this trial with flying colours.

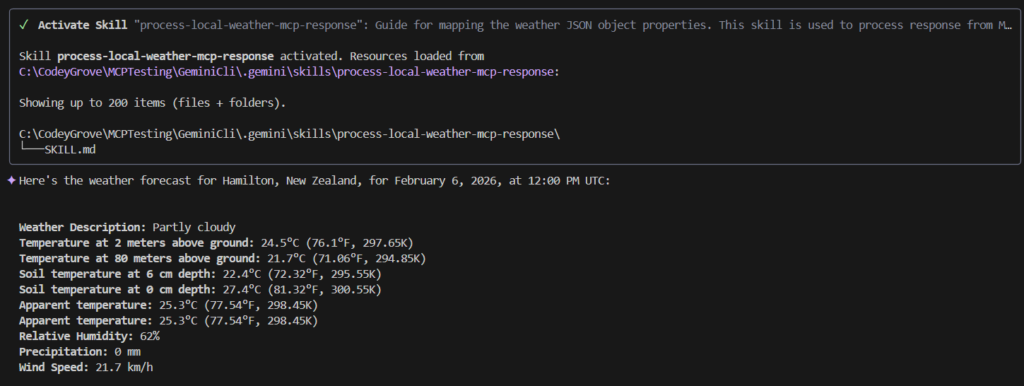
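As a rough illustration of why the natural-language variation leaves the least room for misreading, the Python sketch below renders the same readings two ways. The key names, values and date are made up for this example and are not the actual output of my weather tool.

```python
import json

# Hypothetical readings; the key names and values are made up for illustration.
raw_result = {"temperature": 18.4, "temperature_0cm": 15.1}


def as_raw_json(result: dict) -> str:
    """Raw JSON variation: the model sees only terse keys and numbers."""
    return json.dumps(result)


def as_natural_language(city: str, date: str, result: dict) -> str:
    """Natural-language variation: each number is paired with its meaning and unit."""
    return (
        f"On {date} in {city}, the air temperature was "
        f"{result['temperature']} degrees Celsius and the soil temperature at "
        f"0 cm depth was {result['temperature_0cm']} degrees Celsius."
    )


raw_text = as_raw_json(raw_result)
sentence = as_natural_language("Hamilton", "2026-02-01", raw_result)
print(raw_text)
print(sentence)
```

In the raw form, nothing tells the agent which number is air and which is soil. In the sentence form, that context travels with the value, so it does not depend on the client reading any schema.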

Exploring Agent Skills: A New Way to Handle MCP Context

Agent Skills were first introduced in Gemini CLI v0.23, and in v0.27 we can easily create our own using the skill-creator skill. Instead of creating a new one, I am repurposing the SKILL.md that I used in my previous GitHub Copilot experiment.

Below is the screenshot from Gemini CLI when using the agent skill to handle MCP context.

Conclusion: Balancing Structure and Language

After running these identical trials on Copilot and Gemini, my approach to building for the Model Context Protocol has shifted. To work around current limitations, such as Gemini CLI not yet fully processing the outputSchema used in the UseStructuredContent trial, I will stick with returning results in natural language or ensuring that properties are explicitly named to avoid ambiguity (e.g. soil_0cm_temperature).

By embedding the context directly into the property name, I’m effectively bypassing the model’s current struggle with structured output schemas, ensuring the ‘wisdom’ is part of the data itself rather than its metadata.
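A minimal Python sketch of that renaming step follows. Only soil_0cm_temperature comes from my own naming; the terse source keys, the air_temperature_celsius name and the with_explicit_names helper are hypothetical and exist purely for illustration.

```python
# Hypothetical mapping from terse keys to self-describing ones.
# Only "soil_0cm_temperature" is a name mentioned in this post.
EXPLICIT_NAMES = {
    "temperature": "air_temperature_celsius",
    "temperature_0cm": "soil_0cm_temperature",
}


def with_explicit_names(result: dict, mapping: dict) -> dict:
    """Rename ambiguous keys; keys without a mapping are passed through unchanged."""
    return {mapping.get(key, key): value for key, value in result.items()}


renamed = with_explicit_names(
    {"temperature": 18.4, "temperature_0cm": 15.1, "humidity": 72},
    EXPLICIT_NAMES,
)
print(renamed)
```

The values are untouched; only the keys change, so the result stays machine-readable while carrying its own explanation.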

Compared with my first experience with Gemini CLI, the progress is undeniable. Since that initial journal entry, a rapid cadence of updates, most notably the leap to v0.27, has significantly boosted performance and reliability. What started as an experimental terminal tool is quickly maturing into a robust agentic environment.

Closing Thoughts: The Future of Agent Skills

The most exciting takeaway for me is the introduction of Agent Skills. These modular “brains” represent a major shift in the ecosystem, allowing us to give agents specialised expertise and procedural guardrails that go far beyond simple tool calling.

Now that I have a better handle on how the CLI discovers and activates these capabilities, I am eager to see just how far this goes. My next goal is to explore how to build skills that handle complex reasoning and data transformations, essentially giving the agent the “wisdom” to use its tools more effectively.

This is definitely a space I will be exploring further—stay tuned for the next update as I dive deeper into the world of autonomous terminal agents.
