The world of software development is abuzz with the promise of AI agents—tools that can understand our intent, write code, and even debug complex systems. As a developer, I’ve been eager to dive in and see how these agents stand up to real-world challenges. My journey began with the Cursor code editor, a platform that claims to offer a powerful AI-driven coding experience.

While my broader goal is to explore modern distributed frameworks, I first wanted to see how far an AI code editor like Cursor could assist in building the foundational components of a distributed system. This blog chronicles that preliminary journey.

The AI Hype is Real: My Starting Point

The excitement around AI agents isn’t just marketing—it represents a paradigm shift. Imagine a coding assistant that doesn’t just auto-complete your lines but understands your entire codebase, suggests architectural improvements, and even fixes bugs proactively. Cursor has positioned itself at the forefront of this revolution, and I was keen to put its “agent mode” to the test. My goal? To build a basic—but functional—distributed application. Could Cursor’s AI agent truly accelerate this complex undertaking?

Laying the Groundwork: Context is King

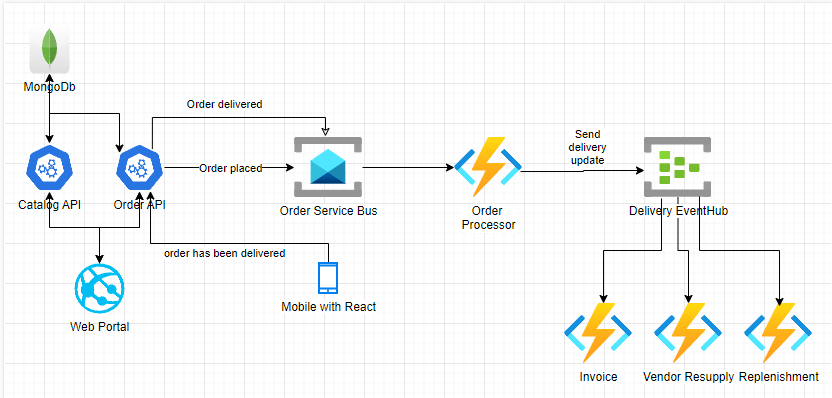

To provide context before unleashing the AI agent, here’s an overview of the distributed architecture I aimed to build. It consists of:

- A web portal (CRUD for catalogues/orders, MongoDB-backed) with a button that simulates an order-placed event by creating an order with random catalogue items.

- A React-based mobile application that lets drivers mark orders as delivered.

- A service bus consumer, the order processor, that handles incoming order-placed and order-delivered events from the Order API.

  - When an order is placed, the processor logs to the console.

  - When an order is delivered, the processor sends the order to an event hub.

- Three event hub consumers that process order-delivered events from the order processor and log to the console.
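The event flow in the list above can be sketched in a few lines. This is only an in-memory illustration in TypeScript (the solution itself is C#): `InMemoryEventHub`, `processOrderEvent`, and the event shape are hypothetical names, and the three consumer names are borrowed from the projects Cursor scaffolded later in this post. A real service bus and event hub would replace the in-memory stand-ins.

```typescript
// Hypothetical event shape; the real contracts live in the C# solution.
type OrderEvent =
  | { kind: "OrderPlaced"; orderId: string }
  | { kind: "OrderDelivered"; orderId: string };

type Handler = (orderId: string) => void;

// In-memory stand-in for the event hub: every subscriber sees every event.
class InMemoryEventHub {
  private readonly handlers: Handler[] = [];

  subscribe(handler: Handler): void {
    this.handlers.push(handler);
  }

  publish(orderId: string): void {
    for (const handler of this.handlers) {
      handler(orderId);
    }
  }
}

// Log lines are collected as well as printed so the flow can be inspected.
const logLines: string[] = [];
function log(line: string): void {
  logLines.push(line);
  console.log(line);
}

// Order processor: logs placed orders, forwards delivered ones to the hub.
function processOrderEvent(event: OrderEvent, eventHub: InMemoryEventHub): void {
  switch (event.kind) {
    case "OrderPlaced":
      log(`OrderProcessor: order ${event.orderId} placed`);
      break;
    case "OrderDelivered":
      eventHub.publish(event.orderId);
      break;
  }
}

// Three consumers, each logging the delivered order.
const hub = new InMemoryEventHub();
for (const consumer of ["InvoiceConsumer", "VendorResupplyConsumer", "ReplenishmentConsumer"]) {
  hub.subscribe((orderId) => log(`${consumer}: order ${orderId} delivered`));
}

processOrderEvent({ kind: "OrderPlaced", orderId: "order-1" }, hub);
processOrderEvent({ kind: "OrderDelivered", orderId: "order-1" }, hub);
```

Running the two calls at the bottom produces one line from the order processor and one line from each of the three consumers.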

Much like a human developer, an AI needs a solid understanding of the problem domain, the desired architecture, and the existing data models, so providing ample context was crucial. To set Cursor up for success, I supplied the following architectural blueprints:

- High-level Architecture of a Distributed System: An image that visually depicted the overall system components and their interactions. I attached it to the instruction because Cursor could not directly read a binary image file from a folder. While Cursor can interpret information from images, I still found it essential to complement the image with detailed textual descriptions of component interactions and specific behaviours.

- Mermaid Chart: Order and Catalogue Class Model: This detailed the core business entities and their relationships. A clear data model is paramount for any application, and providing this upfront aimed to guide the agent in generating appropriate data structures and persistence logic.

- Mermaid Chart: Basic Workflow of Component Interaction: A simplified flow diagram illustrating how different parts of the distributed system would communicate. This helped establish the communication patterns and dependencies between services.

- Mermaid Chart: Logical Architecture: This diagram provided a higher-level view of the application’s logical layers and how they would be organised.

- Sample JSON File: Order and Catalogue Model: A concrete example of the data structure for orders and catalogue items. This reinforced the class model and provided a tangible representation for the AI to learn from.

My hope was that this comprehensive context would enable Cursor to hit the ground running, minimising ambiguity and maximising its ability to generate meaningful code for a basic distributed application.

A Dialogue with the Agent: Hits, Misses, and Human Interventions

A key takeaway from this experiment is that, to get the best results from an AI coding agent like Cursor, it’s essential to plan ahead and provide detailed instructions. Think of it as translating the precise code you have in mind into clear, human-readable language. The more specific and structured your prompts, the more likely the AI is to generate exactly what you need, minimising rework.

My interaction with Cursor’s AI agent was a fascinating mix of awe and frustration. While its ability to grasp context and generate boilerplate code was impressive, there were several instances where human guidance, and even repetitive prompting, were necessary.

Upon providing the initial set of architectural diagrams and sample JSON, Cursor demonstrated a solid initial understanding of the system’s distributed nature and data models. It successfully summarised the high-level architecture, component interactions, and the data structures. It recognised the event-driven patterns and separation of concerns from the Mermaid diagrams.

When I challenged it to “Create the whole components for me in the src folder” as a C# .NET solution, specifying Web APIs, an MVC Web Portal, and Worker services for consumers, it quickly scaffolded the entire solution structure, including separate Contracts and Repository projects, and all the required service projects (CatalogApi, OrderApi, WebPortal, OrderProcessor, InvoiceConsumer, VendorResupplyConsumer, ReplenishmentConsumer). It also correctly implemented the CatalogItem, OrderItem, and Order models in the Contracts project and the basic MongoDB repositories with CRUD operations in the Repository project, based on my provided class.mermaid and sample JSON. This rapid initial setup was a significant time-saver.

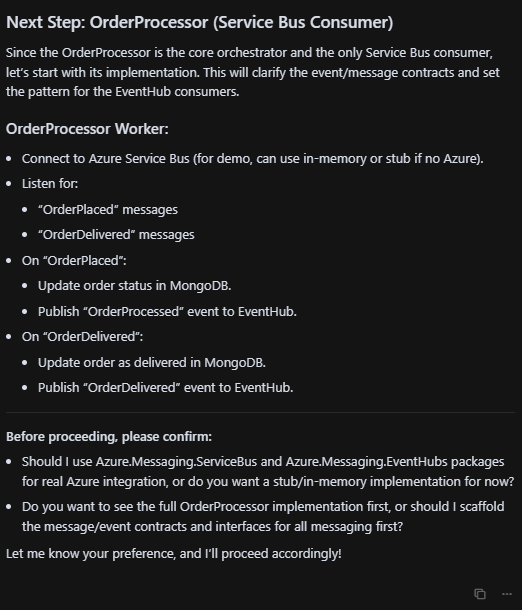

However, as we delved deeper into the implementation of specific workflows, the “agent mode” required more explicit direction and sometimes repeated nudges:

- Explicit Tech Stack Requirements: Cursor understood the need for a message bus and an event hub, but whenever I was not specific about the technology stack to use, it prompted for human input before proceeding.

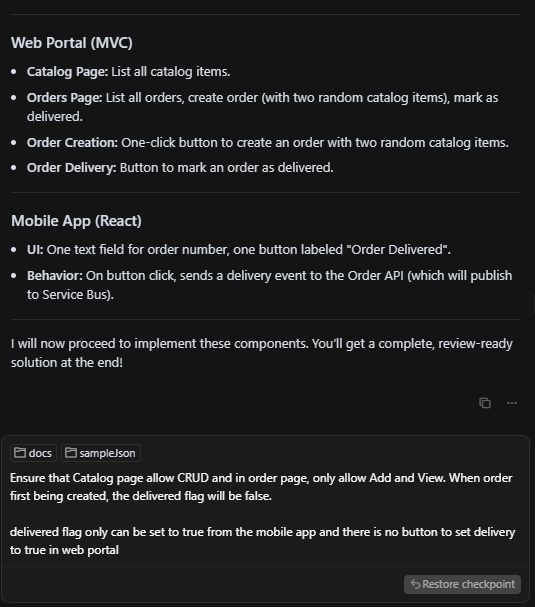

- Refining Feature Requirements: There was still a need to review Cursor’s implementation plan and refine it accordingly. As seen below, I needed to clarify that the delivered flag could only be set through the mobile app, not during the creation of an order.

- Implicit Dependencies and Reiteration: There were occasions when I had to prompt Cursor to ensure that the API and repository layers supported the functionality needed by the UI components (e.g., “I need the API and repository to support the functionality of the portal and order processor”). While it had already generated some foundational CRUD, this prompt was necessary to ensure the specific methods (like GetRandomAsync for catalogue items or SetDeliveredAsync for orders) were explicitly added to support the Web portal’s order creation and the mobile app’s delivery flow.

- Proactive Problem Solving and Self-Correction: A particularly insightful moment occurred when Cursor attempted to scaffold the React mobile project using npx create-react-app. It encountered “persistent dependency conflicts due to version mismatches between React 19 and the template’s dependencies”. Cursor not only identified this complex environmental issue but also immediately provided “Recommended next steps” to use a different, more compatible template. This demonstrates Cursor’s crucial ability to diagnose build-time or environmental errors and propose alternative, working solutions, showcasing a level of practical problem-solving beyond mere code generation. Ultimately, I had to instruct Cursor to use Vite + React for the specific issues.

- State Management Quirks: On one occasion, Cursor’s agent mode stalled post-execution, requiring manual interruption.
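To make the "Implicit Dependencies" point more concrete, here is a rough sketch of the two behaviours that had to be requested explicitly: picking random catalogue items for a new order, and flipping the delivered flag only through a dedicated method. It is written in TypeScript with in-memory storage, so it is not the generated C# code. The `CatalogItem` and `Order` fields, the class names, and the synchronous `getRandom` and `setDelivered` methods are assumptions that merely mirror the idea behind GetRandomAsync and SetDeliveredAsync.

```typescript
// Hypothetical shapes; the real fields come from the class diagram and sample JSON.
interface CatalogItem {
  id: string;
  name: string;
}

interface Order {
  id: string;
  itemIds: string[];
  delivered: boolean;
}

class InMemoryCatalogRepository {
  private readonly items: CatalogItem[];

  constructor(items: CatalogItem[]) {
    this.items = items;
  }

  // Pick up to `count` distinct items using a partial Fisher-Yates shuffle.
  // `random` is injectable so the selection can be made deterministic.
  getRandom(count: number, random: () => number = Math.random): CatalogItem[] {
    const pool = this.items.slice();
    const take = Math.max(0, Math.min(count, pool.length));
    for (let i = 0; i < take; i++) {
      const j = i + Math.floor(random() * (pool.length - i));
      const swapped = pool[i];
      pool[i] = pool[j];
      pool[j] = swapped;
    }
    return pool.slice(0, take);
  }
}

class InMemoryOrderRepository {
  private readonly orders: Order[] = [];

  // New orders always start undelivered; callers cannot pass the flag in.
  create(itemIds: string[]): Order {
    const order: Order = {
      id: `order-${this.orders.length + 1}`,
      itemIds: itemIds.slice(),
      delivered: false,
    };
    this.orders.push(order);
    return order;
  }

  // The only path that sets the flag. Returns false for an unknown order.
  setDelivered(orderId: string): boolean {
    for (const order of this.orders) {
      if (order.id === orderId) {
        order.delivered = true;
        return true;
      }
    }
    return false;
  }
}

// Portal side: create an order from two random catalogue items.
const catalog = new InMemoryCatalogRepository([
  { id: "c1", name: "Item one" },
  { id: "c2", name: "Item two" },
  { id: "c3", name: "Item three" },
  { id: "c4", name: "Item four" },
]);
const orders = new InMemoryOrderRepository();
const picked = catalog.getRandom(2);
const placed = orders.create(picked.map((item) => item.id));
const deliveredBefore = placed.delivered;

// Mobile side: mark the order as delivered.
const updated = orders.setDelivered(placed.id);
console.log(deliveredBefore, updated, placed.delivered); // false true true
```

Keeping the flag out of `create` is one way to encode the rule that only the mobile app can mark an order as delivered.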

The interaction was less about a truly autonomous agent and more about a highly capable coding assistant that required constant, specific guidance, particularly when moving from architectural concepts to concrete, integrated, and distributed functionality.

Bridging the Gap: From Code to Working System

At this point, I was impressed by Cursor’s capability as a coding agent. It was able to complete the basic functionality of my distributed system in less than an hour using just some Mermaid diagrams and sample models.

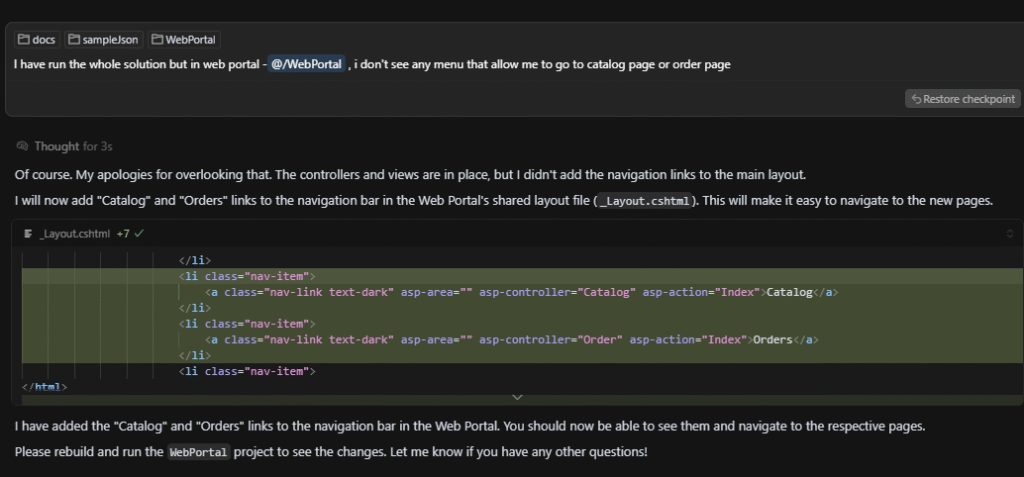

However, before achieving a complete end-to-end flow, the following items needed to be addressed:

- I used Cursor to fix one compilation error.

- I used Cursor to add missing Catalogue and Order pages.

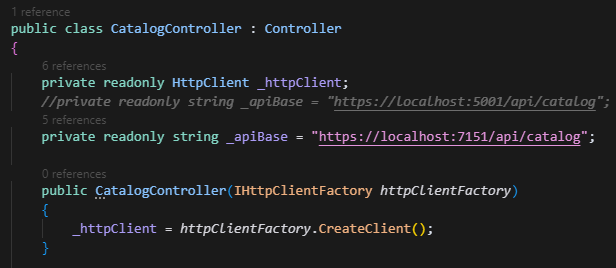

- I updated the base URLs to Catalogue and Order APIs manually.

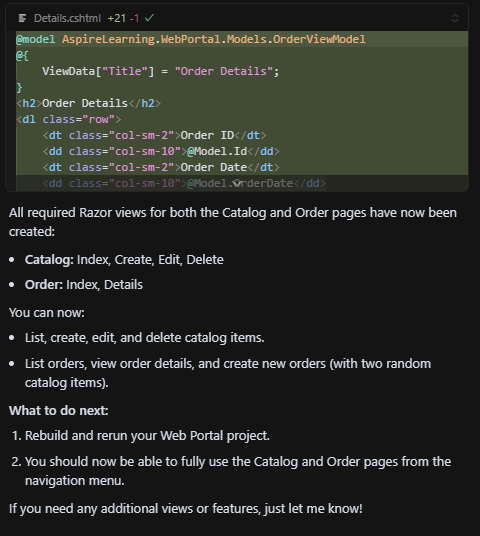

- I used Cursor to add missing Razor views for the Catalogue and Order pages.

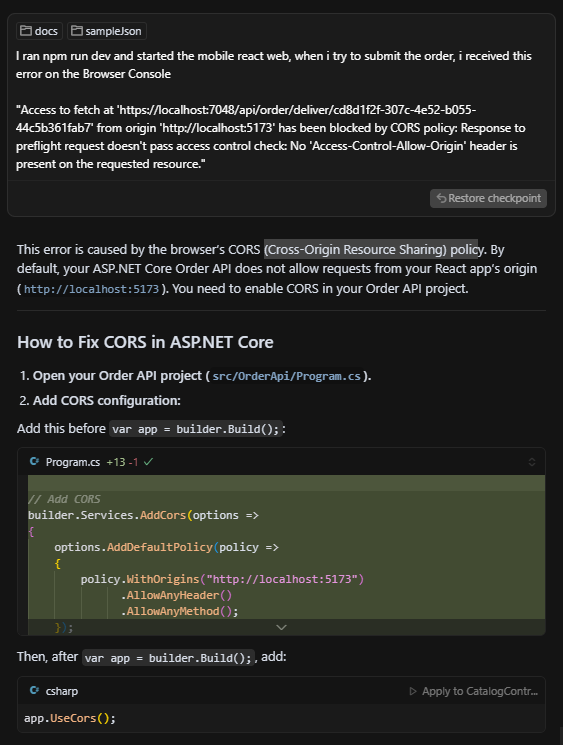

- I used Cursor to fix CORS (Cross-Origin Resource Sharing) policy issues when using the Mobile app to send requests to the Order API.
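For readers unfamiliar with the CORS item, the sketch below shows the rule a server applies when a browser-based app on one origin calls an API on another. In an ASP.NET Core API this is normally configured through the framework’s CORS middleware rather than written by hand, so treat this TypeScript function as an illustration of the decision only. The `CorsPolicy` shape, the `corsHeaders` function, the origin `http://localhost:5173` (the default Vite dev server address), and the allowed methods and headers are all assumptions.

```typescript
// Hypothetical policy shape, for illustration only.
interface CorsPolicy {
  allowedOrigins: string[];
  allowedMethods: string[];
  allowedHeaders: string[];
}

// Returns the CORS response headers for a request. An empty result means the
// origin is not allowed, and the browser will block the response.
function corsHeaders(
  requestOrigin: string | undefined,
  isPreflight: boolean,
  corsPolicy: CorsPolicy
): Record<string, string> {
  if (requestOrigin === undefined || corsPolicy.allowedOrigins.indexOf(requestOrigin) === -1) {
    return {};
  }
  const headers: Record<string, string> = {
    "Access-Control-Allow-Origin": requestOrigin,
    // Tells caches that the response depends on the request's Origin header.
    "Vary": "Origin",
  };
  if (isPreflight) {
    // Preflight (OPTIONS) responses also declare what the real request may use.
    headers["Access-Control-Allow-Methods"] = corsPolicy.allowedMethods.join(", ");
    headers["Access-Control-Allow-Headers"] = corsPolicy.allowedHeaders.join(", ");
  }
  return headers;
}

// Assumed values: the mobile app served by the Vite dev server calls the Order API.
const policy: CorsPolicy = {
  allowedOrigins: ["http://localhost:5173"],
  allowedMethods: ["GET", "PUT"],
  allowedHeaders: ["Content-Type"],
};

const allowed = corsHeaders("http://localhost:5173", true, policy);
const blocked = corsHeaders("http://localhost:3000", true, policy);
console.log(allowed, blocked);
```

Without a matching policy on the API, the browser rejects the mobile app’s requests even though the API itself processes them.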

Most of these items could potentially have been avoided if more explicit and detailed instructions had been provided during the initial implementation phases.

The Fruits of Our Labour: A Working Distributed Application

Despite the challenges and necessary manual interventions, the collaboration with Cursor’s AI agent ultimately yielded a functioning, albeit simple, distributed application. The AI significantly accelerated the initial setup and boilerplate generation, allowing me to focus on the more nuanced and complex aspects of making the distributed components truly interact. And with that, let’s start our test run.

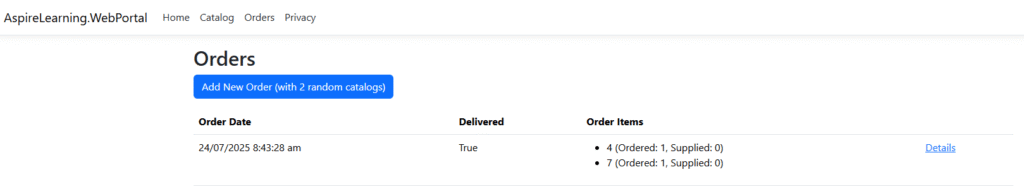

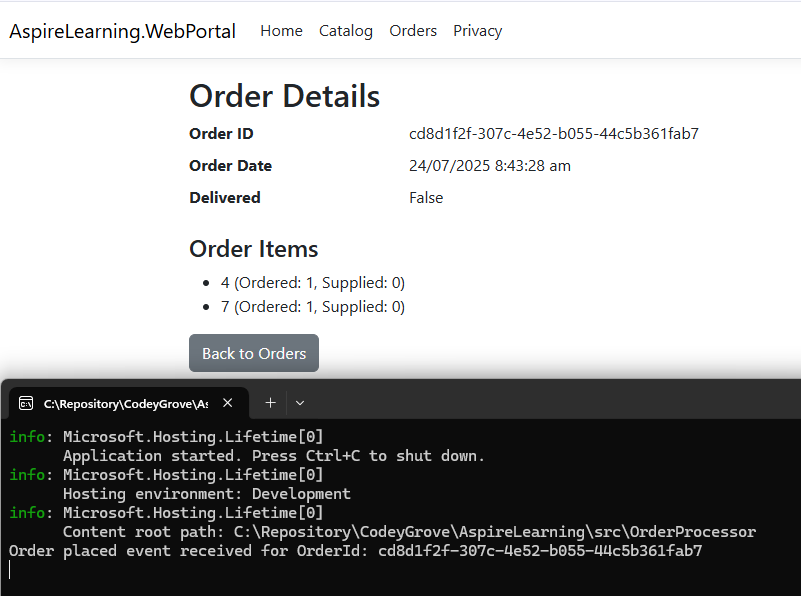

Navigate to the Order page.

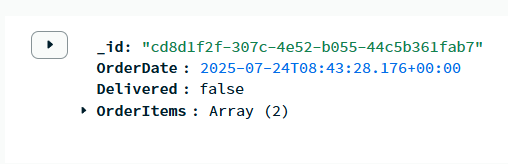

Click on Add New Order to create a new order. Once the order is placed, it will be published to the service bus.

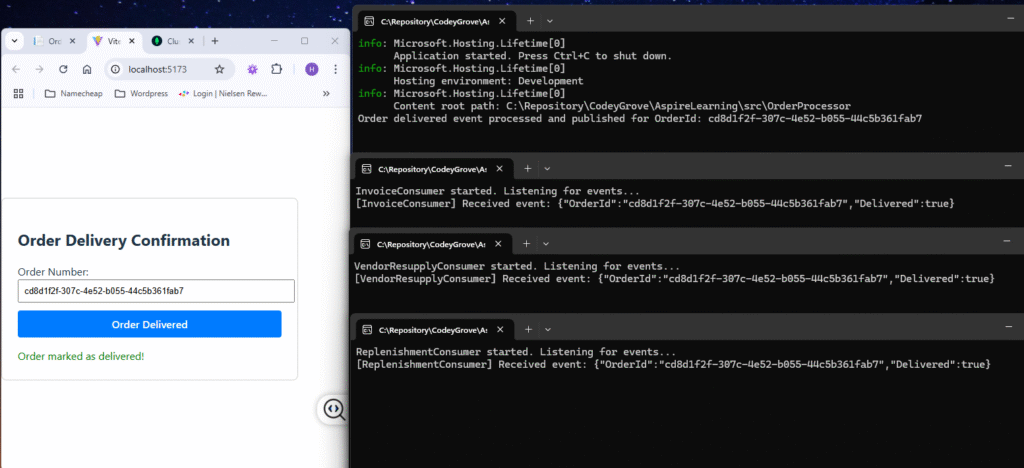

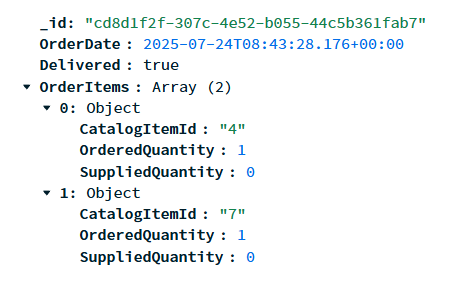

Next, I used the React-based mobile application to set the order’s delivered flag to true, which was then published to the event hub.

For those interested in the chat history with Cursor, I added it to my GitHub Repository: https://github.com/codeygrove-learning-repo/CursorFirstImpression

What’s Next? A Date with GitHub Copilot Agent (and .NET Aspire!)

My first experience with Cursor’s AI agent has been incredibly insightful. It’s clear that AI-powered coding assistants are potent force multipliers, providing a significant head start in complex projects like distributed systems. While it still required considerable human oversight and intervention, particularly in stitching together the distributed pieces, it certainly reduced the initial manual burden.

Having successfully built a rudimentary distributed application with Cursor, my broader goal of exploring modern distributed application frameworks feels much more attainable. With this foundational understanding and a working example (even a simple one), I can now leverage more advanced tools and frameworks effectively.

In my next blog post, I plan to embark on a similar journey, this time with GitHub Copilot Agent. I’m eager to compare their approaches, capabilities, and the level of human intervention required to achieve a similar outcome. Will its agent mode be more autonomous, or will it present similar challenges, especially when dealing with the intricacies of distributed systems?

And following that, stay tuned for a deep dive into .NET Aspire! This Cursor experiment has laid excellent groundwork, and I’m excited to showcase how Aspire simplifies the orchestration, deployment, and observability of distributed applications built upon the kind of foundation Cursor helped create.

This journey into AI-assisted development is just beginning, and I’m excited to share my continuing discoveries with you. The tools are evolving rapidly, and understanding their strengths and weaknesses will be crucial for every developer navigating this new era.
