If you’re working in the world of Infrastructure as Code (IaC), particularly with cloud platforms, chances are you’re intimately familiar with Terraform. We rely on it to define, provision, and manage our infrastructure in a consistent and predictable manner. The dream scenario? Run terraform plan, ideally see: “No changes. Your infrastructure matches the configuration.” Any changes you do see should be the direct result of modifications you’ve intentionally made in your Terraform configuration, giving you the confidence to terraform apply.

But what happens when that dream turns into a nightmare? Well, that nightmare happened to me. Picture this: in November 2024, I made changes to our YAML pipeline configuration as part of routine maintenance. I ran the pipeline and, because there were no Terraform configuration changes, terraform apply ended with the beloved “No changes. Your infrastructure matches the configuration.” message. I was greatly pleased.

Then fast forward to June 2025. Because support for TLS 1.0 and 1.1 is being removed from Azure storage accounts, I updated the azurerm_storage_account configuration to use the latest TLS version. I ran the pipeline to deploy the Terraform change but, instead of seeing just the TLS change, I was surprised to see the following:

-/+ destroy and then create replacement

A series of "must be replaced" and "# forces replacement" messages

And .....

Plan: 12 to add, 8 to change, 12 to destroy.

It was a full-blown destroy and recreate on resources that I hadn’t updated. So I reviewed the PRs from the past few months and confirmed there were, indeed, no changes to the Terraform configuration. Then I downloaded the Terraform state and compared it with the actual Azure resources and, again, nothing had changed. Curious, I re-ran the last successful pipeline (November 2024), hoping to see “No changes. Your infrastructure matches the configuration.” Sadly, it showed “Plan: 12 to add, 8 to change, 12 to destroy.” instead, even with the old Terraform configuration. The confusion was real. What changed? I wondered.

Our Infrastructure Landscape: Building on Existing Foundations

To understand how this seemingly impossible scenario unfolded, let’s briefly touch upon our infrastructure setup. In our Azure environment, we often deploy our application-specific resources into existing resource groups. These parent resource groups are typically managed by a central platform team — outside our application’s specific Terraform configuration.

This common pattern offers several benefits, such as centralizing resource group management and enforcing consistent tagging policies at a higher level. To reference these external resource groups within our Terraform, we leverage data blocks.

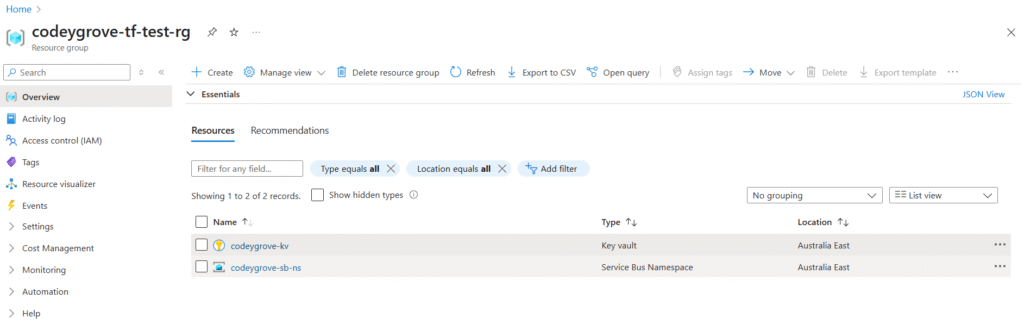
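A data block of the kind described above might look like the following. This is a minimal sketch, not the configuration used in this article: the label `existing` and the output are illustrative assumptions, and the article's own root module passes var.rg_name and var.location straight into each resource instead.

```hcl
# Sketch only: look up a resource group that another team manages.
variable "rg_name" {
  description = "Name of the existing resource group, for example codeygrove-tf-test-rg"
  type        = string
}

# The data block reads the resource group; it does not create or manage it.
data "azurerm_resource_group" "existing" {
  name = var.rg_name
}

# Hypothetical consumer: anything read from the data source is only as
# stable as the external resource it describes.
output "existing_rg_location" {
  value = data.azurerm_resource_group.existing.location
}
```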

To illustrate the issue, let’s create the following resources in an existing resource group called codeygrove-tf-test-rg:

- A key vault where the Service Bus connection strings will be saved as secrets

- A Service Bus namespace and two topics under this namespace

The following is the root main.tf:

```hcl
data "azurerm_client_config" "current" {}

resource "azurerm_key_vault" "kv" {
  name                            = "codeygrove-kv"
  location                        = var.location
  resource_group_name             = var.rg_name
  enabled_for_disk_encryption     = false
  enabled_for_deployment          = true
  enabled_for_template_deployment = true
  tenant_id                       = data.azurerm_client_config.current.tenant_id
  sku_name                        = "standard"

  network_acls {
    default_action = "Allow"
    bypass         = "AzureServices"
  }

  lifecycle {
    ignore_changes = [purge_protection_enabled]
  }
}

resource "azurerm_key_vault_access_policy" "ap2" {
  key_vault_id = azurerm_key_vault.kv.id
  tenant_id    = data.azurerm_client_config.current.tenant_id
  object_id    = var.codeygrove_object_id

  secret_permissions = [
    "Backup",
    "Delete",
    "Get",
    "List",
    "Purge",
    "Recover",
    "Restore",
    "Set"
  ]

  depends_on = [azurerm_key_vault.kv]
}

resource "azurerm_servicebus_namespace" "codeygrove_sb_namespace" {
  name                = "codeygrove-sb-ns"
  resource_group_name = var.rg_name
  location            = var.location
  sku                 = "Standard"
}

resource "azurerm_servicebus_topic" "codeygrobe_events_topic" {
  name                 = "codeygrove-topic"
  namespace_id         = azurerm_servicebus_namespace.codeygrove_sb_namespace.id
  partitioning_enabled = true

  lifecycle {
    ignore_changes = [
      partitioning_enabled,
    ]
  }
}

resource "azurerm_servicebus_topic_authorization_rule" "codeygrove_manage_auth_rule" {
  name     = "codeygrove-manage-auth-rule"
  topic_id = azurerm_servicebus_topic.codeygrobe_events_topic.id
  listen   = true
  send     = true
  manage   = true

  depends_on = [azurerm_servicebus_topic.codeygrobe_events_topic]
}

resource "azurerm_key_vault_secret" "manage_events_connection_string" {
  name         = "manage-connection-string"
  value        = azurerm_servicebus_topic_authorization_rule.codeygrove_manage_auth_rule.primary_connection_string
  key_vault_id = azurerm_key_vault.kv.id

  depends_on = [azurerm_key_vault_access_policy.ap2, azurerm_servicebus_topic_authorization_rule.codeygrove_manage_auth_rule]
}

module "codeygrove_forwarded_topic" {
  source         = "./modules/forwardTopic"
  rg_name        = var.rg_name
  namespace_name = azurerm_servicebus_namespace.codeygrove_sb_namespace.name
  from_topic     = "codeygrove-topic"
  to_topic       = "other-topic"

  depends_on = [azurerm_servicebus_topic.codeygrobe_events_topic]
}

resource "azurerm_key_vault_secret" "another_topic_events_connection_string" {
  name         = "another-topic-manage-connection-string"
  value        = module.codeygrove_forwarded_topic.topic_connection_string
  key_vault_id = azurerm_key_vault.kv.id

  depends_on = [module.codeygrove_forwarded_topic, azurerm_key_vault_access_policy.ap2]
}
```

Next is the module main.tf, which creates the second topic and a subscription that forwards messages from the first topic to the second. A key point in this configuration is its use of Terraform data sources: they look up the namespace and the source topic that the second topic and the forwarding subscription are built on. In a real-world scenario, the same module is also used to create forwarding subscriptions and topics in another namespace.
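The module's inputs and output are not shown in this article. The sketch below is an assumption reconstructed from how the root module calls it: the four variable names and the output name topic_connection_string come from the root main.tf, while the file names, descriptions, and the choice of which connection string the output exposes are guesses.

```hcl
# modules/forwardTopic/variables.tf (assumed, not shown in the article)
variable "rg_name" {
  description = "Resource group that contains the Service Bus namespace"
  type        = string
}

variable "namespace_name" {
  description = "Name of the existing Service Bus namespace"
  type        = string
}

variable "from_topic" {
  description = "Name of the existing source topic"
  type        = string
}

variable "to_topic" {
  description = "Name of the destination topic the module creates"
  type        = string
}

# modules/forwardTopic/outputs.tf (assumed)
output "topic_connection_string" {
  # Assumption: the output exposes the destination topic's authorization rule.
  value     = azurerm_servicebus_topic_authorization_rule.forward_to_destination_auth_rule.primary_connection_string
  sensitive = true # keeps the connection string out of plan and apply output
}
```

With inputs along those lines, the module's main.tf is: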

```hcl
# Use data block to get reference to service bus namespace
data "azurerm_servicebus_namespace" "sb_namespace" {
  name                = var.namespace_name
  resource_group_name = var.rg_name
}

# Use data block to get reference to source topic
data "azurerm_servicebus_topic" "source_topic" {
  name         = var.from_topic
  namespace_id = data.azurerm_servicebus_namespace.sb_namespace.id
}

resource "azurerm_servicebus_topic" "destination_topic" {
  name = var.to_topic

  # Service bus namespace reference from data block is used for destination_topic namespace id
  namespace_id = data.azurerm_servicebus_namespace.sb_namespace.id

  batched_operations_enabled   = false
  express_enabled              = false
  requires_duplicate_detection = false
  support_ordering             = false

  lifecycle {
    ignore_changes = [
      partitioning_enabled,
    ]
  }
}

resource "azurerm_servicebus_subscription" "forward_to_destination_subscription" {
  name = "forward-to-destination-subs"

  # Source topic reference from data block is used to create forward subscription
  topic_id = data.azurerm_servicebus_topic.source_topic.id

  max_delivery_count                   = 10
  forward_to                           = azurerm_servicebus_topic.destination_topic.name
  batched_operations_enabled           = false
  dead_lettering_on_message_expiration = false
  requires_session                     = false

  depends_on = [azurerm_servicebus_topic.destination_topic]
}

resource "azurerm_servicebus_topic_authorization_rule" "forward_to_destination_auth_rule" {
  name     = "forward-to-destination-auth-rule"
  topic_id = azurerm_servicebus_topic.destination_topic.id
  listen   = true
  send     = true
  manage   = true

  depends_on = [azurerm_servicebus_topic.destination_topic]
}
```

We ran terraform plan and apply through the pipeline and 10 resources were added. (For the purpose of this demo, I am running it locally.)

Apply complete! Resources: 10 added, 0 changed, 0 destroyed.

Happy with the result, we re-ran the pipeline, just to check the plan. (For the purpose of this demo, I am running it locally.)

No changes. Your infrastructure matches the configuration.

As expected, there were no changes to our infrastructure. Everyone was happy and we all could sleep soundly that night.

However, not everyone slept well that night because the cost of Azure resources had increased and we had no idea how to allocate this cost properly. Someone needed to figure out a way to consolidate information about ownership for all these resources and allocate them to the right cost center. That someone came across an article about tag governance and how to group and allocate costs using tag inheritance.

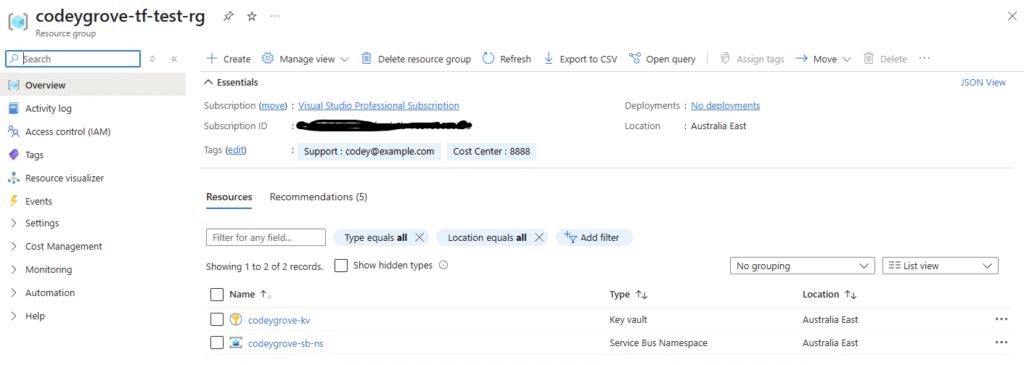

Over the next few months, the platform team gathered information about the ownership of each resource group. They decided to add Cost Center and Support tags to each resource group, use them to charge usage costs to the correct department, and assign the teams responsible for monitoring those costs. That wasn’t the end of it: the platform team also needed to ensure the same tags were applied to all resources in each group. Hence, an Azure Policy was created to enforce tag governance, and remediation tasks were created and run. The following is the resulting resource in Azure:

The Plot Twist: When Data Blocks Trigger Replacements

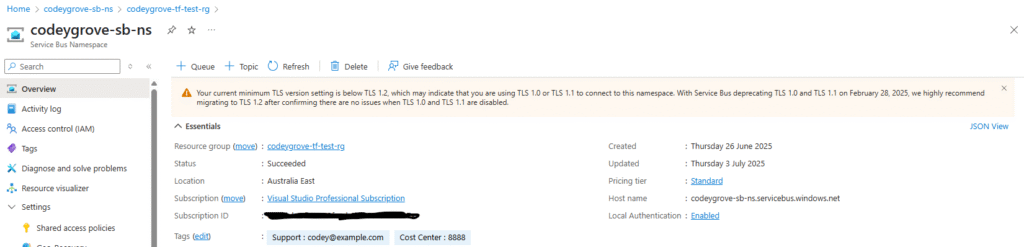

Fast forward a few months and we received the TLS news. To ensure compliance, we updated our Terraform configuration in the root main.tf as follows:

```hcl
resource "azurerm_servicebus_namespace" "codeygrove_sb_namespace" {
  name                = "codeygrove-sb-ns"
  resource_group_name = var.rg_name
  location            = var.location
  sku                 = "Standard"
  minimum_tls_version = "1.2" # we added this line to ensure compliance
}
```

We kicked off our pipeline, confident the plan would show “Plan: 0 to add, 1 to change, 0 to destroy.” To our surprise, this was the output from terraform plan:

```
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  ~ update in-place
-/+ destroy and then create replacement
 <= read (data resources)

Terraform will perform the following actions:

  # azurerm_key_vault.kv will be updated in-place
  ~ resource "azurerm_key_vault" "kv" {
        id   = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.KeyVault/vaults/codeygrove-kv"
        name = "codeygrove-kv"
      ~ tags = {
          - "Cost Center" = "8888" -> null
          - "Support"     = "codey@example.com" -> null
        }
        # (13 unchanged attributes hidden)
        # (1 unchanged block hidden)
    }

  # azurerm_key_vault_secret.another_topic_events_connection_string will be updated in-place
  ~ resource "azurerm_key_vault_secret" "another_topic_events_connection_string" {
        id    = "https://codeygrove-kv.vault.azure.net/secrets/another-topic-manage-connection-string/6fd3704e424642dba3bf610d0af591de"
        name  = "another-topic-manage-connection-string"
        tags  = {}
      ~ value = (sensitive value)
        # (8 unchanged attributes hidden)
    }

  # azurerm_servicebus_namespace.codeygrove_sb_namespace will be updated in-place
  ~ resource "azurerm_servicebus_namespace" "codeygrove_sb_namespace" {
        id                  = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns"
      ~ minimum_tls_version = "1.1" -> "1.2" # THIS IS THE ONLY THING I EXPECT TO SEE
        name                = "codeygrove-sb-ns"
      ~ tags                = {
          - "Cost Center" = "8888" -> null
          - "Support"     = "codey@example.com" -> null
        }
        # (12 unchanged attributes hidden)
        # (1 unchanged block hidden)
    }

  # module.codeygrove_forwarded_topic.data.azurerm_servicebus_namespace.sb_namespace will be read during apply
  # (depends on a resource or a module with changes pending)
 <= data "azurerm_servicebus_namespace" "sb_namespace" {
      + capacity                            = (known after apply)
      + default_primary_connection_string   = (sensitive value)
      + default_primary_key                 = (sensitive value)
      + default_secondary_connection_string = (sensitive value)
      + default_secondary_key               = (sensitive value)
      + endpoint                            = (known after apply)
      + id                                  = (known after apply)
      + location                            = (known after apply)
      + name                                = "codeygrove-sb-ns"
      + premium_messaging_partitions        = (known after apply)
      + resource_group_name                 = "codeygrove-tf-test-rg"
      + sku                                 = (known after apply)
      + tags                                = (known after apply)
    }

  # module.codeygrove_forwarded_topic.data.azurerm_servicebus_topic.source_topic will be read during apply
  # (config refers to values not yet known)
 <= data "azurerm_servicebus_topic" "source_topic" {
      + auto_delete_on_idle                     = (known after apply)
      + batched_operations_enabled              = (known after apply)
      + default_message_ttl                     = (known after apply)
      + duplicate_detection_history_time_window = (known after apply)
      + enable_batched_operations               = (known after apply)
      + enable_express                          = (known after apply)
      + enable_partitioning                     = (known after apply)
      + express_enabled                         = (known after apply)
      + id                                      = (known after apply)
      + max_size_in_megabytes                   = (known after apply)
      + name                                    = "codeygrove-topic"
      + namespace_id                            = (known after apply)
      + partitioning_enabled                    = (known after apply)
      + requires_duplicate_detection            = (known after apply)
      + status                                  = (known after apply)
      + support_ordering                        = (known after apply)
    }

  # module.codeygrove_forwarded_topic.azurerm_servicebus_subscription.forward_to_destination_subscription must be replaced
-/+ resource "azurerm_servicebus_subscription" "forward_to_destination_subscription" {
      ~ id       = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns/topics/codeygrove-topic/subscriptions/forward-to-destination-subs" -> (known after apply)
        name     = "forward-to-destination-subs"
      ~ topic_id = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns/topics/codeygrove-topic" -> (known after apply) # forces replacement
        # (12 unchanged attributes hidden)
    }

  # module.codeygrove_forwarded_topic.azurerm_servicebus_topic.destination_topic must be replaced
-/+ resource "azurerm_servicebus_topic" "destination_topic" {
      ~ id                            = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns/topics/other-topic" -> (known after apply)
      ~ max_message_size_in_kilobytes = 256 -> (known after apply)
      ~ max_size_in_megabytes         = 5120 -> (known after apply)
        name                          = "other-topic"
      ~ namespace_id                  = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns" -> (known after apply) # forces replacement
      - partitioning_enabled          = false -> null
        # (8 unchanged attributes hidden)
    }

  # module.codeygrove_forwarded_topic.azurerm_servicebus_topic_authorization_rule.forward_to_destination_auth_rule must be replaced
-/+ resource "azurerm_servicebus_topic_authorization_rule" "forward_to_destination_auth_rule" {
      ~ id                                = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns/topics/other-topic/authorizationRules/forward-to-destination-auth-rule" -> (known after apply)
        name                              = "forward-to-destination-auth-rule"
      ~ primary_connection_string         = (sensitive value)
      + primary_connection_string_alias   = (sensitive value)
      ~ primary_key                       = (sensitive value)
      ~ secondary_connection_string       = (sensitive value)
      + secondary_connection_string_alias = (sensitive value)
      ~ secondary_key                     = (sensitive value)
      ~ topic_id                          = "/subscriptions/<azure_subscription_id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns/topics/other-topic" -> (known after apply) # forces replacement
        # (3 unchanged attributes hidden)
    }

Plan: 3 to add, 3 to change, 3 to destroy.
```

As someone who is not a Terraform expert, seeing this plan was extremely frustrating, especially since I hadn’t updated the Terraform configuration in a while. My mind started to wander: “Who updated our application resources?”, “Did we make Terraform changes but fail to deploy them?” Nonetheless, I knew I didn’t have time for guesswork and needed to focus on the plan itself. A few things in the plan caught my attention:

- The tags being set to null on the service bus namespace and key vault

- The “must be replaced” statement

- And the “(known after apply) # forces replacement” statement

Let’s start with the tags. We tried both options below, re-ran the plan, and got “Plan: 3 to add, 2 to change, 3 to destroy.”

```hcl
# Option 1 - Add ignore_changes to both resources
lifecycle { ignore_changes = [tags] }

# Option 2 - Add the tags to the Terraform config
tags = {
  "Cost Center" = "8888"
  "Support"     = "codey@example.com"
}
```

This eliminated one change, as the Key Vault configuration now matched the actual resource. Unfortunately, some resources were still going to be replaced, so we moved on to our next troubleshooting step.
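To show where Option 1 sits inside a resource, here is a minimal sketch using the namespace from the root main.tf. It assumes var.rg_name and var.location are declared as in the rest of the configuration. For the key vault, which already has a lifecycle block, my understanding is that tags would be added to the existing ignore_changes list (for example `[purge_protection_enabled, tags]`) rather than declared in a second lifecycle block.

```hcl
resource "azurerm_servicebus_namespace" "codeygrove_sb_namespace" {
  name                = "codeygrove-sb-ns"
  resource_group_name = var.rg_name
  location            = var.location
  sku                 = "Standard"
  minimum_tls_version = "1.2"

  lifecycle {
    # Tags are applied outside Terraform (by Azure Policy in this story),
    # so differences in tags are ignored when planning.
    ignore_changes = [tags]
  }
}
```

The trade-off is that Terraform no longer manages tags on this resource at all, whereas Option 2 keeps them in code but has to be updated whenever the policy's tag values change.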

Next, we checked our provider.tf and pinned the terraform and azurerm versions to the ones in use when the plan last showed “No changes”. Below is provider.tf before we updated it. After running terraform plan, the output was still the same: “Plan: 3 to add, 2 to change, 3 to destroy.”

```hcl
terraform {
  required_version = ">= 0.12" # Before fix

  required_providers {
    azurerm = {
      source  = "registry.terraform.io/hashicorp/azurerm"
      version = ">= 2.0" # Before fix
    }
  }
}
```

Since pinning the versions did not resolve the issue, we shifted our attention to “must be replaced” and “(known after apply) # forces replacement”. First, we used refresh-only mode to sync the Terraform state file. Once that was done, we compared terraform.tfstate with the actual Azure resources, specifically the properties marked “(known after apply)” (connection_string, namespace_id and topic_id). Nothing seemed to have changed, but the plan still produced the same message: “Plan: 3 to add, 2 to change, 3 to destroy.”

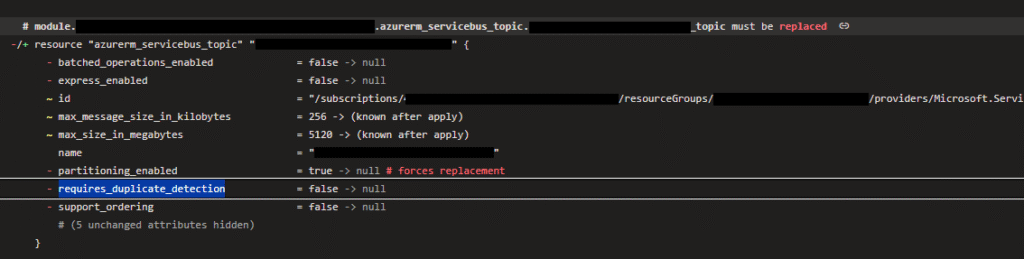
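For comparison with the open-ended constraints in the provider.tf above, a pinned version could look like the sketch below. The version numbers are placeholders chosen for illustration, not the versions used in the pipeline described here; substitute whatever versions produced your last clean plan.

```hcl
terraform {
  # Placeholder: allows only 1.9.x patch releases of the Terraform CLI.
  required_version = "~> 1.9.0"

  required_providers {
    azurerm = {
      source = "registry.terraform.io/hashicorp/azurerm"
      # Placeholder: an exact pin, so a new provider release cannot
      # change plan behaviour between pipeline runs.
      version = "= 4.0.1"
    }
  }
}
```

The dependency lock file (.terraform.lock.hcl) records the exact provider version that terraform init selected, so committing it is another way to keep provider versions from moving between runs.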

We decided to give the plan another look, this time focusing on “# forces replacement”. Scanning through the plan, “# forces replacement” appears mostly on namespace_id and topic_id. Both are referenced from data blocks, and the plan contains “<= read” actions that did not exist in the last successful pipeline run. Certainly there was resource drift, but we couldn’t find it when comparing the state file.

Hence, we decided to reference the namespace and topic as managed resources instead of data blocks. These are the changes we made to main.tf in the module:

```hcl
# Module main.tf
# Remove both data blocks

resource "azurerm_servicebus_topic" "destination_topic" {
  name = var.to_topic

  # Pass in the namespace_id as a variable from the resource block in root main.tf
  namespace_id = var.input_namespace_id

  # Existing configuration ......................
}

resource "azurerm_servicebus_subscription" "forward_to_destination_subscription" {
  name = "forward-to-destination-subs"

  # Pass in the source topic_id as a variable from the resource block in root main.tf
  topic_id = var.input_topic_id

  # Existing configuration ......................
}

# Existing configuration ......................
```

And these are the changes we made to the root main.tf:

```hcl
module "codeygrove_forwarded_topic" {
  source = "./modules/forwardTopic"

  # reference the namespace resource
  input_namespace_id = azurerm_servicebus_namespace.codeygrove_sb_namespace.id

  # reference the topic resource
  input_topic_id = azurerm_servicebus_topic.codeygrobe_events_topic.id

  to_topic = "other-topic"

  depends_on = [azurerm_servicebus_topic.codeygrobe_events_topic]
}
```

And finally, we were happy with the terraform plan output and everyone could sleep soundly that night.

```
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # azurerm_servicebus_namespace.codeygrove_sb_namespace will be updated in-place
  ~ resource "azurerm_servicebus_namespace" "codeygrove_sb_namespace" {
        id                  = "/subscriptions/<azure-subscription-id>/resourceGroups/codeygrove-tf-test-rg/providers/Microsoft.ServiceBus/namespaces/codeygrove-sb-ns"
      ~ minimum_tls_version = "1.1" -> "1.2"
        name                = "codeygrove-sb-ns"
        tags                = {
            "Cost Center" = "8888"
            "Support"     = "codey@example.com"
        }
        # (12 unchanged attributes hidden)
        # (1 unchanged block hidden)
    }

Plan: 0 to add, 1 to change, 0 to destroy.
```

The deeper connection to the platform team’s tag inheritance strategy for cost management wasn’t immediately apparent. Even after we solved the “forces replacement” dilemma, the underlying “resource drift” remained unexplained. The true “aha!” moment came much later, while I was preparing this very article. I replicated the issue by applying a tag inheritance policy to a test resource group. This pointed directly to the Azure Policy remediation tasks we’d previously identified: tasks designed to ensure resources inherit tags from their parent. This policy enforcement was the reason our Terraform plan showed existing tags being set to null, despite no such configuration in our code.

During my testing, I also discovered that, without a tag inheritance policy configured, adding tags to the resource group produced no change in the terraform plan. It remains a mystery to me why terraform plan refreshed the data source only when the tag inheritance policy was in place.
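For anyone who wants to reproduce the drift on a test resource group, a policy assignment along these lines might do it. This is a sketch under assumptions, not the platform team's actual setup: I am assuming the built-in definition named "Inherit a tag from the resource group if missing" and its tagName parameter, and the resource labels and assignment name are hypothetical.

```hcl
# Sketch only: assign a built-in tag inheritance policy to a test resource group.
data "azurerm_resource_group" "test" {
  name = "codeygrove-tf-test-rg"
}

# Assumption: look up the built-in definition by its display name.
data "azurerm_policy_definition" "inherit_tag" {
  display_name = "Inherit a tag from the resource group if missing"
}

resource "azurerm_resource_group_policy_assignment" "inherit_cost_center" {
  name                 = "inherit-cost-center-tag" # hypothetical name
  resource_group_id    = data.azurerm_resource_group.test.id
  policy_definition_id = data.azurerm_policy_definition.inherit_tag.id
  location             = data.azurerm_resource_group.test.location

  # Policies that modify resources act through a managed identity.
  identity {
    type = "SystemAssigned"
  }

  parameters = jsonencode({
    tagName = { value = "Cost Center" }
  })
}
```

The sketch leaves out two pieces: the assignment's identity needs a role that allows it to write tags, and existing resources only pick up the tag once a remediation task has run. A second assignment would be needed for the Support tag.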

Terraform ForceNew Schema Behaviour

Since we are on the topic of forced replacement, I want to share another common source of unexpected replacements: Terraform’s ForceNew schema behaviour. When a field in a provider resource’s schema has ForceNew set to true, the resource is destroyed and recreated whenever that field’s value changes.

Let’s go through an example where the Terraform configuration was not modified, but we upgraded the azurerm provider from version 3.x to 4.x. In version 4.x of azurerm, when certain properties are not provided, azurerm assigns null to the existing property.

```hcl
data "azurerm_servicebus_namespace" "sb_namespace" {
  name                = var.namespace_name
  resource_group_name = var.rg_name
}

# Create new topic
resource "azurerm_servicebus_topic" "new_topic" {
  name         = var.new_topic_name
  namespace_id = data.azurerm_servicebus_namespace.sb_namespace.id
}
```

When the Terraform plan is generated, Terraform will destroy and recreate the azurerm_servicebus_topic resource. That is because the partitioning_enabled field in the azurerm provider schema has ForceNew set to true (see: https://github.com/hashicorp/terraform-provider-azurerm/blob/85c4cd6c33447ec17db4d70646d0900820cd800a/internal/services/servicebus/servicebus_topic_resource.go#L105). If we are not planning to configure such properties through Terraform, we can add them to the lifecycle ignore_changes rule.
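Applied to the topic above, that ignore_changes rule might look like the following minimal sketch. It reuses the names from the example and assumes partitioning_enabled is the only ForceNew field in play.

```hcl
resource "azurerm_servicebus_topic" "new_topic" {
  name         = var.new_topic_name
  namespace_id = data.azurerm_servicebus_namespace.sb_namespace.id

  lifecycle {
    # partitioning_enabled is not managed here, so a difference between the
    # existing value and the provider's default should not trigger a replacement.
    ignore_changes = [
      partitioning_enabled,
    ]
  }
}
```

The alternative is to set partitioning_enabled explicitly to the value the existing topic already has, which keeps the field under Terraform's control.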

Conclusion

Ultimately, this “Terraform nightmare” highlighted a crucial lesson. While data blocks offer a convenient way to reference existing infrastructure, they come with a hidden cost: implicit dependencies on external states. Even minor, unmanaged changes in those external resources can ripple through your data blocks, leading to unexpected and disruptive resource replacements. Moving forward, my firm recommendation is this: whenever a managed resource can be referenced within a module, opt for that direct connection rather than relying on a data block. Following this simple principle can prevent many hours of frustrating troubleshooting.
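The module side of that recommendation can be sketched as below. The variable names input_namespace_id, input_topic_id and to_topic come from the module changes shown earlier; the file name, types, and descriptions are my assumptions.

```hcl
# modules/forwardTopic/variables.tf (assumed file name)
variable "input_namespace_id" {
  description = "ID of the Service Bus namespace, passed in from the managed resource in the root module"
  type        = string
}

variable "input_topic_id" {
  description = "ID of the source topic, passed in from the managed resource in the root module"
  type        = string
}

variable "to_topic" {
  description = "Name of the destination topic the module creates"
  type        = string
}
```

The plan annotation seen earlier, “(depends on a resource or a module with changes pending)”, hints at why this helps. Terraform can defer a data source read to apply time when something it depends on has pending changes, which turns every attribute it feeds into “(known after apply)”. IDs passed from managed resources are already known at plan time, so nothing downstream is forced to be replaced.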
